Memory Machine for CXL Server Memory Expansion

New Memory Expansion Model

Servers with CXL 1.1 support introduce a new architectural model for memory expansion. They accommodate CXL Type 3 Add-in Cards (AICs) and E3.S devices, which substantially increase memory capacity at significantly lower cost.

Server Memory Expansion Overview

Our Memory Machine for CXL provides IT organizations with the tools to utilize CXL fully. In DRAM-only environments, the Insights feature provides information about applications’ hot working set size, allowing application owners and architects to size new server memory and CXL configurations accurately. The Quality-of-Service (QoS) feature provides Latency and Bandwidth policies that improve application performance, enabling unmodified applications to run seamlessly on servers with DRAM and CXL. Intelligent memory management can boost utilization of precious GPU resources.
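As a rough illustration of what a hot working set size means for sizing, the sketch below computes the memory occupied by the smallest set of pages that receives a chosen share of all accesses. This is one possible definition and not a description of how the Insights feature works; the function name, the 4 KiB page size, the 90% coverage threshold, and the sample counts are all assumptions made for the example.

```python
PAGE_SIZE_BYTES = 4096  # assumed 4 KiB pages, for illustration only


def hot_working_set_bytes(access_counts, coverage=0.9):
    """Bytes in the smallest set of pages that receives `coverage` of all accesses.

    `access_counts` holds one access count per page (hypothetical sample data).
    """
    total = sum(access_counts)
    if total == 0:
        return 0
    covered = 0
    hot_pages = 0
    # Walk pages from most to least accessed until the coverage target is met.
    for count in sorted(access_counts, reverse=True):
        if covered >= coverage * total:
            break
        covered += count
        hot_pages += 1
    return hot_pages * PAGE_SIZE_BYTES


counts = [500, 300, 150, 30, 20]  # made-up per-page access counts
hot_bytes = hot_working_set_bytes(counts, coverage=0.9)
print(hot_bytes)
```

Under this definition, a server whose DRAM comfortably holds the hot working set could plausibly place the remaining, colder pages in CXL memory.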

Key Features & Benefits

Observability

Memory Machine for CXL starts by providing valuable insights into server resource usage to help IT organizations understand if and how servers can benefit from CXL memory. Once in production, Memory Machine continues to report the performance of applications using mixed memory configurations.

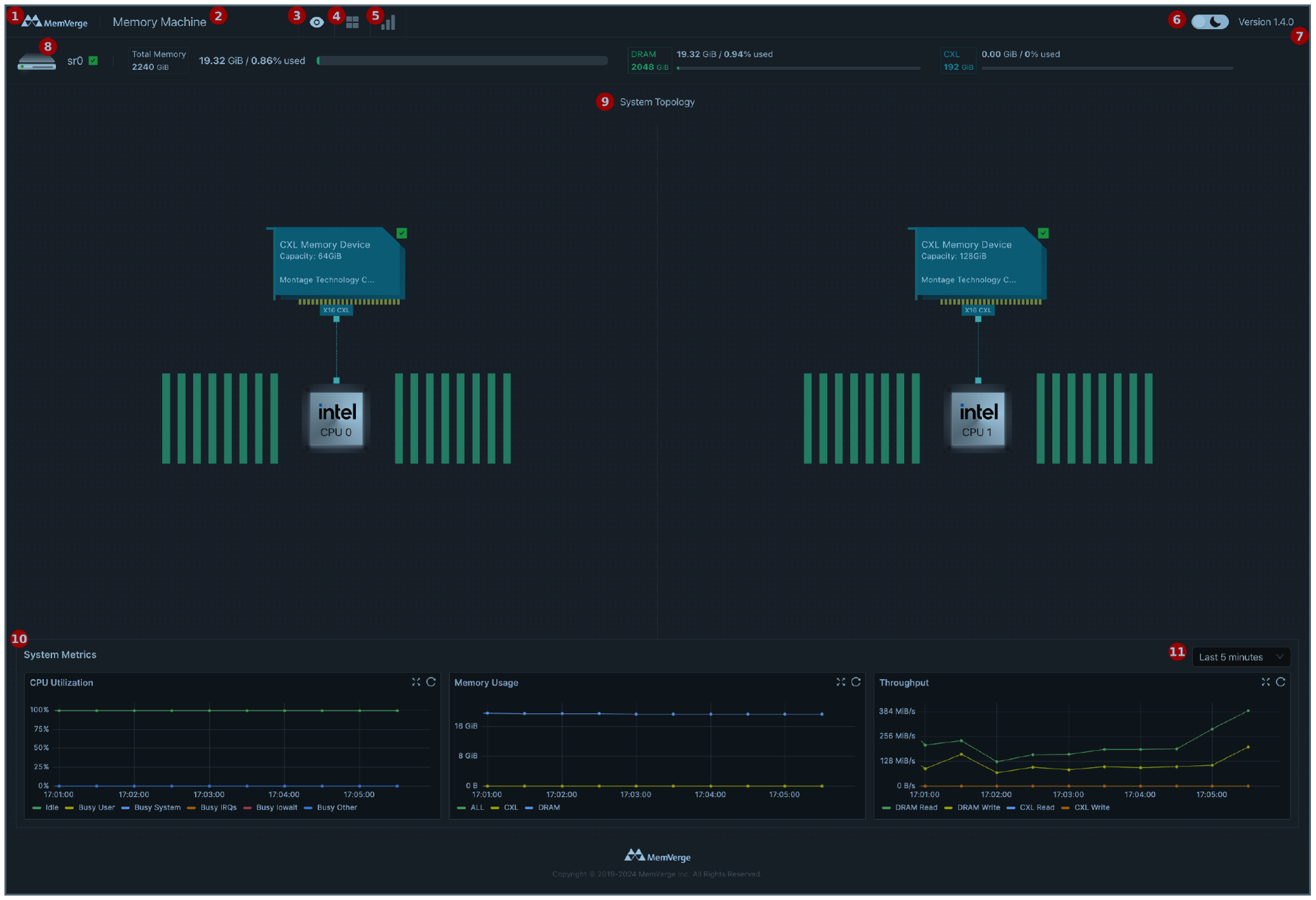

Memory Machine System Topology Dashboard

Legend: 1. Your company logo, 2. MemVerge product name, 3. Dashboard & system topology menu, 4. Quality of Service (QoS) menu item, 5. Insights menu item, 6. Light or dark UI mode selector, 7. MemVerge product version, 8. System information, 9. System topology, 10. System metrics, 11. System metrics date/time selector

Intelligent Tiering of Mixed Memory

Memory Machine for CXL makes it possible for IT organizations to scale memory in a server to 32TB at half the cost without sacrificing application performance. The Server Memory Expansion software adapts to varying workloads with intelligent placement policies and memory page movement to optimize latency or bandwidth.

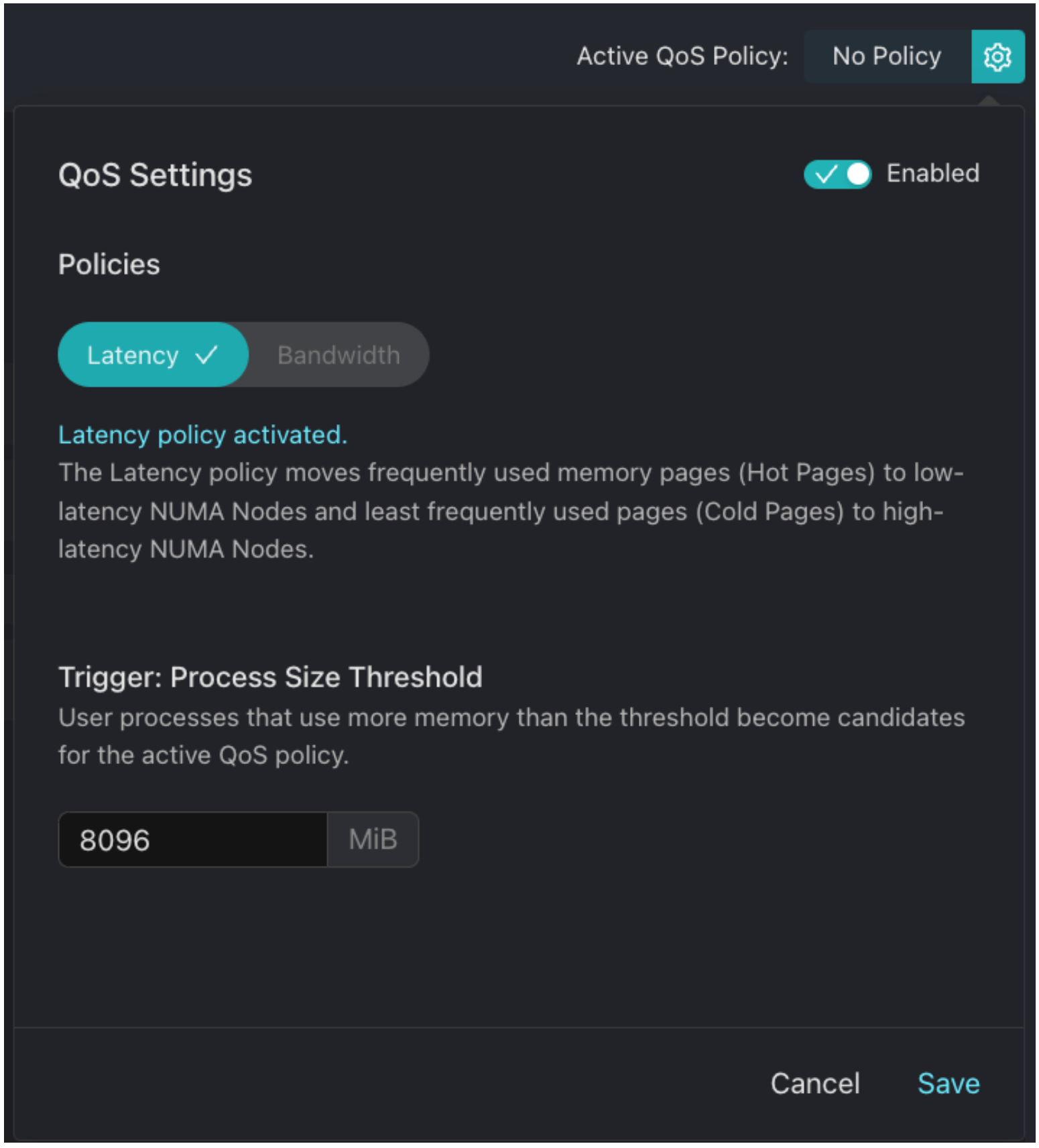

Latency Policy

Latency tiering intelligently manages data placement across heterogeneous memory devices to optimize performance based on the “temperature” of memory pages, that is, how frequently they are accessed.

The MemVerge QoS engine moves hot pages to DRAM, where they can be accessed quickly. Cold pages are placed in CXL memory.

By ensuring that frequently accessed data is stored in DRAM, the system reduces the average latency of memory accesses, leading to faster application performance.
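The effect of hot/cold placement on average latency can be sketched in a few lines. The example below is a simplified model and not the MemVerge QoS engine: it ranks pages by access count, fills a fixed DRAM budget with the hottest pages, and computes the access-weighted mean latency. The `Page` class, the function names, and the latency figures are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical latencies in nanoseconds, chosen only for illustration.
DRAM_LATENCY_NS = 100.0
CXL_LATENCY_NS = 250.0


@dataclass
class Page:
    page_id: int
    access_count: int  # accesses observed in a sampling window


def place_pages(pages, dram_capacity_pages):
    """Put the most frequently accessed pages in DRAM and the rest in CXL."""
    ranked = sorted(pages, key=lambda p: p.access_count, reverse=True)
    dram = {p.page_id for p in ranked[:dram_capacity_pages]}
    cxl = {p.page_id for p in ranked[dram_capacity_pages:]}
    return dram, cxl


def average_latency_ns(pages, dram):
    """Access-weighted mean latency for a given set of DRAM-resident pages."""
    total = sum(p.access_count for p in pages)
    if total == 0:
        return 0.0
    weighted = sum(
        p.access_count * (DRAM_LATENCY_NS if p.page_id in dram else CXL_LATENCY_NS)
        for p in pages
    )
    return weighted / total


pages = [Page(0, 900), Page(1, 50), Page(2, 30), Page(3, 20)]
dram, cxl = place_pages(pages, dram_capacity_pages=1)
tiered = average_latency_ns(pages, dram)      # hottest page in DRAM
all_cxl = average_latency_ns(pages, set())    # nothing in DRAM
print(tiered, all_cxl)
```

With these made-up numbers, keeping only the single hottest page in DRAM brings the average access latency much closer to DRAM latency than to CXL latency, because that page receives most of the accesses.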

See how intelligent tiering with a latency policy was used to improve MySQL performance in TPC-C benchmark tests and how GPU utilization was boosted by 77%.

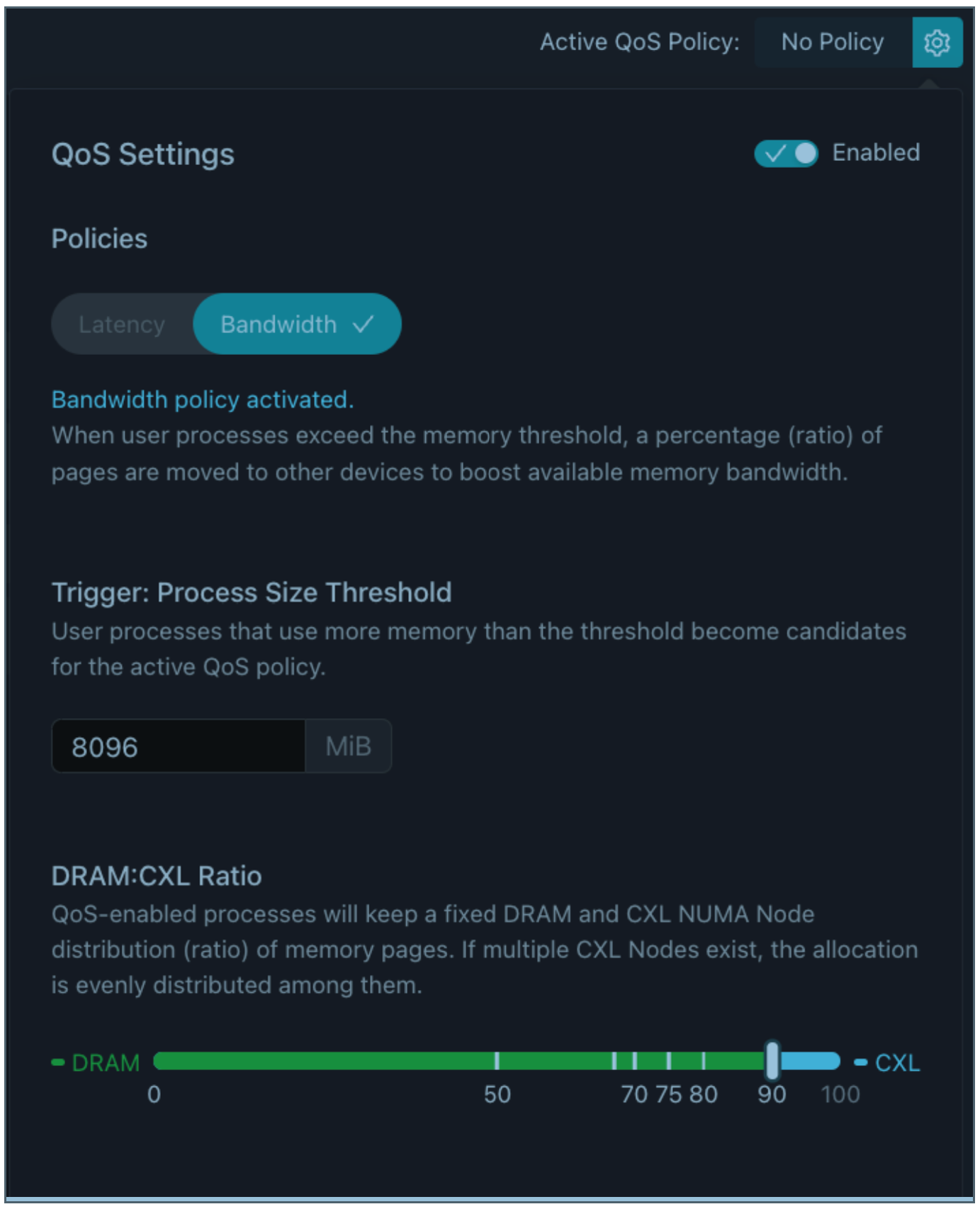

Bandwidth Policy

The goal of bandwidth-optimized memory placement and movement is to maximize the overall system bandwidth by strategically placing and moving data between DRAM and CXL memory based on the application’s bandwidth requirements.

The bandwidth policy engine uses the available bandwidth of all DRAM and CXL memory devices. A user-selectable ratio of DRAM to CXL placement maintains a balance between bandwidth and latency.
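One way to picture a DRAM-to-CXL ratio is as a weighted interleave of pages across the two tiers. The sketch below is an assumption-laden model and not the product's implementation: `interleave` assigns pages in a repeating pattern, and `peak_throughput` estimates the total traffic both tiers can sustain when accesses are spread evenly over all pages. The function names and bandwidth figures are hypothetical.

```python
def interleave(num_pages, dram_weight, cxl_weight):
    """Assign pages to tiers in a repeating dram_weight:cxl_weight pattern."""
    if dram_weight < 0 or cxl_weight < 0 or dram_weight + cxl_weight == 0:
        raise ValueError("weights must be non-negative and not both zero")
    cycle = ["DRAM"] * dram_weight + ["CXL"] * cxl_weight
    return [cycle[i % len(cycle)] for i in range(num_pages)]


def peak_throughput(dram_bw, cxl_bw, dram_weight, cxl_weight):
    """Highest total traffic before either tier saturates.

    Assumes accesses are uniform across pages, so each tier carries a share
    of traffic equal to its share of the interleave weights.
    """
    total = dram_weight + cxl_weight
    if dram_weight < 0 or cxl_weight < 0 or total == 0:
        raise ValueError("weights must be non-negative and not both zero")
    limits = []
    if dram_weight:
        limits.append(dram_bw * total / dram_weight)
    if cxl_weight:
        limits.append(cxl_bw * total / cxl_weight)
    return min(limits)


placement = interleave(10, dram_weight=3, cxl_weight=2)

# Hypothetical bandwidths in GB/s, for illustration only.
dram_only = peak_throughput(300.0, 100.0, dram_weight=1, cxl_weight=0)
balanced = peak_throughput(300.0, 100.0, dram_weight=3, cxl_weight=1)
print(placement.count("DRAM"), placement.count("CXL"), dram_only, balanced)
```

In this model, a ratio that matches the bandwidth ratio of the two tiers lets both saturate together, so total throughput exceeds what DRAM alone provides. The trade-off is that a larger share of accesses then pays CXL latency, which is why the ratio is a balance between bandwidth and latency.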

See how intelligent tiering with a bandwidth policy was used to improve vector database performance based on Weaviate benchmark tests.