Memory Machine™ AI

Surfing GPUs for continuous optimization

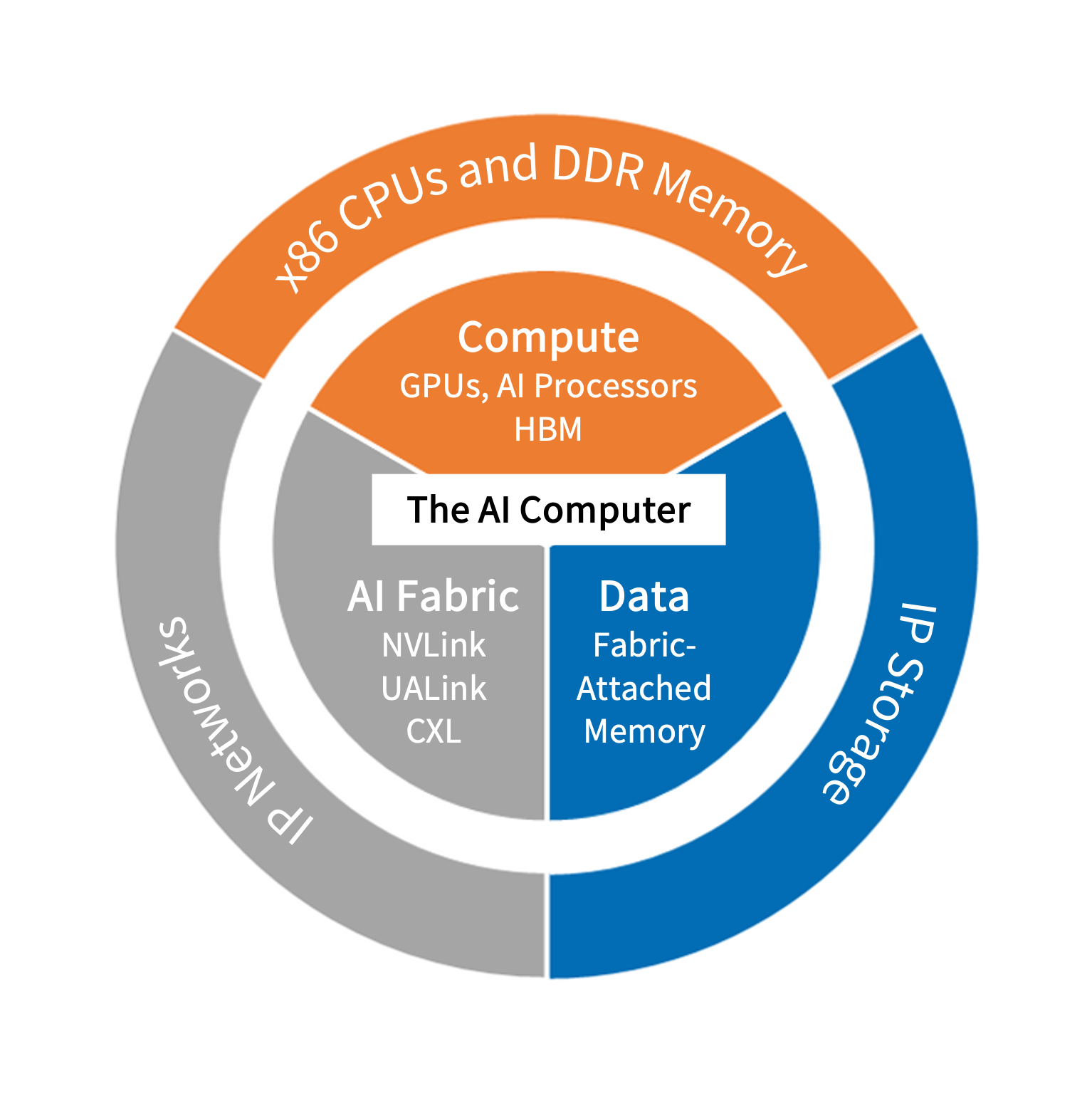

Computing in the AI Era

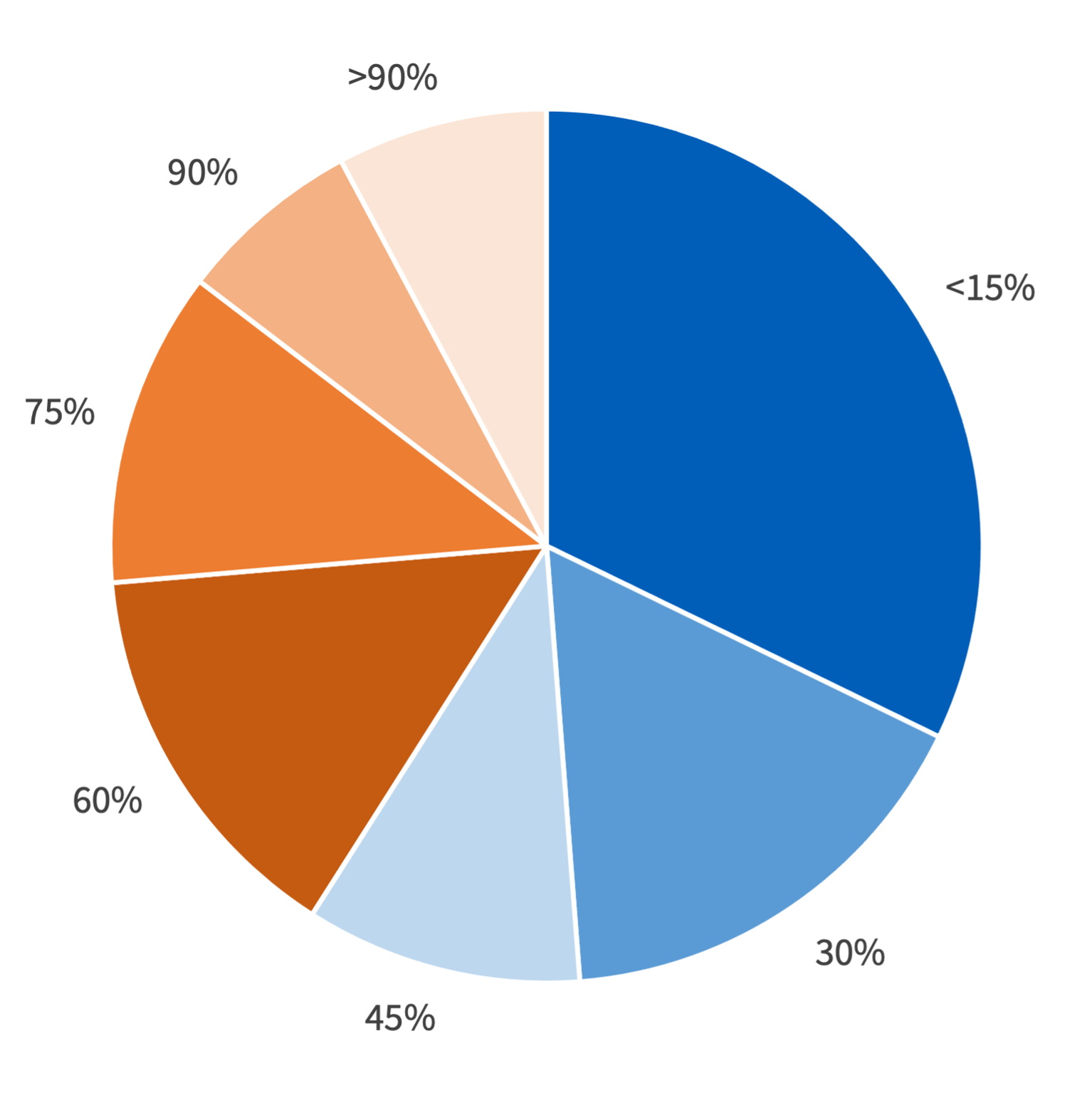

In the new era of GPU-centric computing for AI, much GPU capacity sits idle: a third of wandb survey respondents report average GPU utilization below 15%, and over half report less than 50%. That is why efficient utilization of GPU clusters is crucial for enterprises that want to maximize their return on investment.

Memory Machine for AI

Memory Machine for AI tackles the GPU utilization challenges of the AI era head-on. Designed specifically for AI training, inference, batch, and interactive workloads, the software allows your workloads to surf GPU resources for continuous optimization.

Serving GPUs on demand, Memory Machine ensures your clusters are fully utilized, delivering GPU-as-a-Service for superior performance, security, user experience, and cost savings.

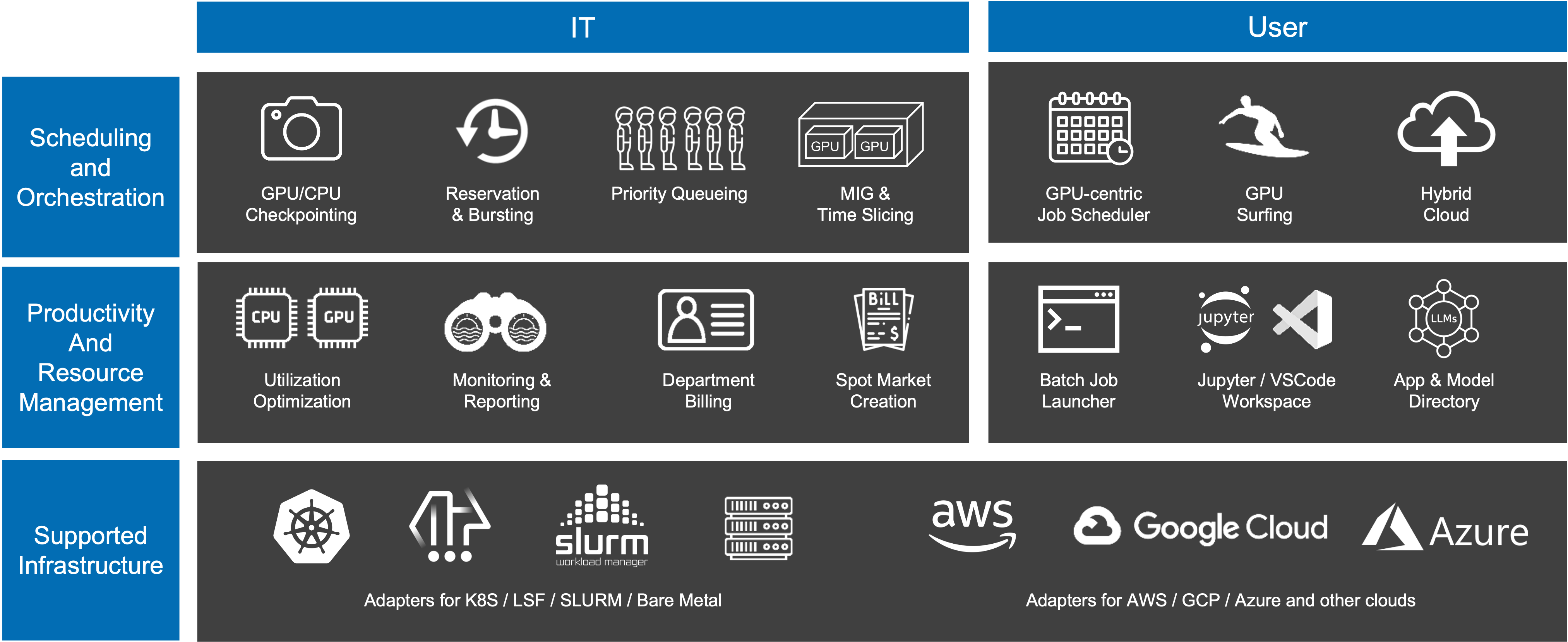

Memory Machine for AI features a suite of capabilities essential to IT pros and users involved in deploying cluster infrastructure and AI applications.

Key Features & Benefits

GPU Surfing

Ensure uninterrupted job execution by transparently migrating user jobs to available hardware resources when the original GPUs become unavailable, maintaining continuous operation and maximizing resource efficiency.

Automatically Suspend and Resume Jobs Transparently

Seamlessly move user jobs across the AI Platform, safeguard against out-of-memory conditions, and prioritize critical tasks by automatically suspending and resuming lower-priority jobs, ensuring uninterrupted and efficient resource management.
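To make the idea of priority-based suspend and resume concrete, here is a minimal Python sketch. It is not Memory Machine's implementation or API: the `Job` class, the `admit` function, and the convention that a higher `priority` number means a more important job are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int          # assumed convention: higher number = more important
    gpus: int              # number of GPUs the job needs
    state: str = "pending"  # "pending", "running", or "suspended"


def admit(new_job, jobs, total_gpus):
    """Try to start new_job, suspending lower-priority running jobs if needed.

    Returns the list of jobs that were suspended (possibly empty), or None
    if new_job cannot fit even after suspending every lower-priority job.
    Nothing is changed when None is returned.
    """
    free = total_gpus - sum(j.gpus for j in jobs if j.state == "running")

    # Candidates for suspension, least important first.
    candidates = sorted(
        (j for j in jobs if j.state == "running" and j.priority < new_job.priority),
        key=lambda j: j.priority,
    )

    victims = []
    for job in candidates:
        if free >= new_job.gpus:
            break
        victims.append(job)
        free += job.gpus

    if free < new_job.gpus:
        return None

    for job in victims:
        job.state = "suspended"
    new_job.state = "running"
    jobs.append(new_job)
    return victims
```

In this sketch a suspended job simply keeps its `state` field set to `"suspended"`; a real system would also checkpoint the job so that it can resume later without losing work.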

Optimal GPU Utilization

Intelligent GPU sharing algorithms eliminate idle resources and maximize utilization.

Intuitive User Experience

Easy-to-use UI, CLI, and API for seamless workload management. The user interface provides proactive monitoring and optimization.

Intelligent Job Queueing & Scheduling

Optimizes user jobs for the available hardware by employing a variety of advanced scheduling policies and algorithms to ensure maximum efficiency and performance.

Flexible GPU Allocations

Optimize resource utilization by dynamically reallocating idle GPUs from other projects, ensuring efficient use of available hardware and minimizing downtime.
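The reallocation of idle GPUs between projects can be pictured with a small Python sketch. This is an illustrative model only, not the product's allocation logic: the `allocate` function, the `quota` and `in_use` dictionaries, and the policy of using a project's own quota before borrowing are hypothetical.

```python
def allocate(project, requested, quota, in_use):
    """Grant `requested` GPUs to `project`, borrowing idle GPUs if needed.

    quota[p]  : GPUs assigned to project p (assumed static here)
    in_use[p] : GPUs of p's quota that are currently occupied

    Returns {"own": n, "borrowed": {other_project: m, ...}}, or None if
    the whole cluster does not have enough idle GPUs.
    """
    # Use the project's own unused quota first.
    own_free = max(0, quota[project] - in_use.get(project, 0))
    from_own = min(requested, own_free)
    needed = requested - from_own

    # Then borrow whatever other projects are leaving idle.
    borrowed = {}
    for other, q in quota.items():
        if needed == 0:
            break
        if other == project:
            continue
        idle = max(0, q - in_use.get(other, 0))
        take = min(idle, needed)
        if take:
            borrowed[other] = take
            needed -= take

    if needed:
        return None
    return {"own": from_own, "borrowed": borrowed}
```

For example, with `quota = {"vision": 4, "nlp": 4}` and `in_use = {"vision": 4, "nlp": 1}`, a request for 2 more GPUs by `"vision"` is served entirely from the 3 GPUs that `"nlp"` is not using. The sketch does not cover returning borrowed GPUs when the lending project needs them back.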

Optimized for NVIDIA GPUs

Leverage advanced NVIDIA GPU capabilities for superior performance and efficiency with tailored optimizations that maximize the potential of NVIDIA hardware in AI/ML workloads.

Granular Resource Assignment

Partition your infrastructure into specific departments and projects to ensure precise and optimal allocation of resources, maximizing efficiency and reducing waste.

Comprehensive Workload Support

Accommodate diverse user workloads, including training, inference, interactive, and distributed tasks. An integrated application and model directory stores existing and customized Docker images in a secure private repository for streamlined deployment and management.

Extensive Infrastructure Support

Seamlessly integrate with diverse infrastructure environments, including Kubernetes, LSF, SLURM, bare metal, and public cloud platforms such as AWS, GCP, Azure, and more, providing unparalleled flexibility and scalability for AI/ML workloads.

Cloud Bursting

Enable seamless scheduling of user jobs on public cloud resources, ensuring continuous operation and scalability without compromising performance.