Memory Wall, Big Memory, and the Era of AI

By Charles Fan, CEO

In the fast-evolving landscape of artificial intelligence (AI), where models grow larger and more complex by the day, the demand for efficient processing of vast amounts of data has ushered in a new era of computing infrastructure. Transformer models, large language models (LLMs), and generative AI all rely heavily on matrix computations over large tensors. This shift has propelled GPUs and other AI processors into the spotlight, because they are designed to perform large-scale matrix multiplications efficiently and grow more powerful with each generation.

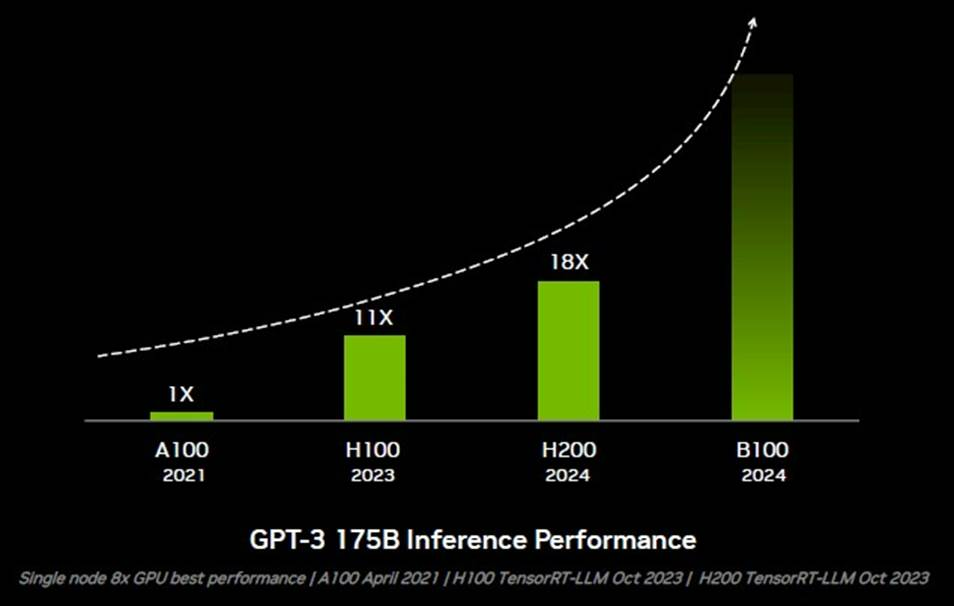

However, as AI models balloon in size, a critical bottleneck emerges: the limited high-bandwidth memory (HBM) available to these processors and the constrained bandwidth of the fabric that interconnects them. Today’s largest models, often comprising hundreds of billions or even trillions of weights, require hundreds of gigabytes to terabytes of memory for both training and inference. While GPU processing power has grown exponentially, the HBM capacity on each GPU has grown far more slowly, so many models cannot fit entirely within the memory of a single GPU. This problem is commonly referred to as the “memory wall”.
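To put the memory wall in numbers, the back-of-the-envelope sketch below estimates how much memory a model's weights alone occupy. It assumes a fixed number of bytes per parameter (2 bytes for FP16) and, purely for illustration, 80 GB of HBM per GPU; the helper names are hypothetical, and real workloads need additional memory for activations, the KV cache, and (in training) optimizer state.

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory in GB (1 GB = 1e9 bytes) needed to hold the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, hbm_gb: float, dtype: str = "fp16") -> int:
    """Fewest GPUs whose combined HBM could hold the weights.

    Ignores activations, KV cache, optimizer state, and fragmentation,
    so this is a lower bound, not a deployment plan."""
    return math.ceil(weight_memory_gb(num_params, dtype) / hbm_gb)


# Assumption: 80 GB of HBM per GPU (illustrative value).
print(weight_memory_gb(66e9))           # 132.0  (66B parameters in FP16)
print(min_gpus_for_weights(66e9, 80))   # 2
print(weight_memory_gb(1e12))           # 2000.0 (1 trillion parameters in FP16)
```

Even before counting activations or the KV cache, the 132 GB of FP16 weights in a 66-billion-parameter model exceed a single 80 GB GPU, which is the memory wall in miniature.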

“…it looks to our eye like we can expect a lot more inference performance, and we strongly suspect this will be a breakthrough in memory, not compute, as the chart below suggests that was meant to illustrate the performance jump…”

– The Next Platform

To address this challenge, the concept of Big Memory Computing has come to the fore. Big Memory Computing encompasses a suite of technologies that scale memory both vertically and horizontally, expanding memory capacity to meet the growing needs of AI workloads. Horizontal scaling distributes work across multiple GPUs, using parallelism techniques such as data, tensor, and pipeline parallelism to shard the model and its data among them. However, this approach often incurs significant data-transfer overhead between GPUs, which lowers GPU utilization and slows overall performance.
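The sketch below simulates one common form of horizontal scaling, column-wise tensor parallelism, for a single matrix product `y = x @ W`. The "devices" are plain Python lists rather than GPUs, and the 2-byte element size and all function names are illustrative assumptions. It shows where the transfer overhead comes from: each device computes its slice of the output locally, but an all-gather must then ship every slice to every other device.

```python
from typing import List, Tuple

Matrix = List[List[float]]
BYTES_PER_ELEMENT = 2  # Assumption: FP16 activations.


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive dense product: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def shard_columns(w: Matrix, num_devices: int) -> List[Matrix]:
    """Split W into num_devices equal, contiguous column blocks (one per device)."""
    cols = len(w[0])
    assert cols % num_devices == 0, "column count must divide evenly"
    size = cols // num_devices
    return [[row[d * size:(d + 1) * size] for row in w] for d in range(num_devices)]


def column_parallel_matmul(x: Matrix, w: Matrix, num_devices: int) -> Tuple[Matrix, int]:
    """Compute x @ W with W sharded by columns across simulated devices.

    Returns (y, bytes_received), where bytes_received is the total data
    received across all devices in the all-gather that rebuilds y."""
    shards = shard_columns(w, num_devices)
    # Step 1: each device multiplies x by its own shard; no communication needed.
    partials = [matmul(x, shard) for shard in shards]
    # Step 2: all-gather. Concatenate the per-device column slices row by row.
    y = [[v for p in partials for v in p[i]] for i in range(len(x))]
    # Each device already holds its own slice and must receive the other N - 1.
    slice_elems = len(x) * (len(w[0]) // num_devices)
    bytes_received = num_devices * (num_devices - 1) * slice_elems * BYTES_PER_ELEMENT
    return y, bytes_received


x = [[1, 2]]
w = [[1, 0, 2, 0],
     [0, 1, 0, 2]]
y, comm = column_parallel_matmul(x, w, num_devices=2)
print(y, comm)  # [[1, 2, 2, 4]] 8
```

For a fixed output size, each device in this sketch receives (N - 1)/N of the output, so total traffic keeps growing as devices are added, which is one reason adding GPUs does not translate linearly into throughput.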

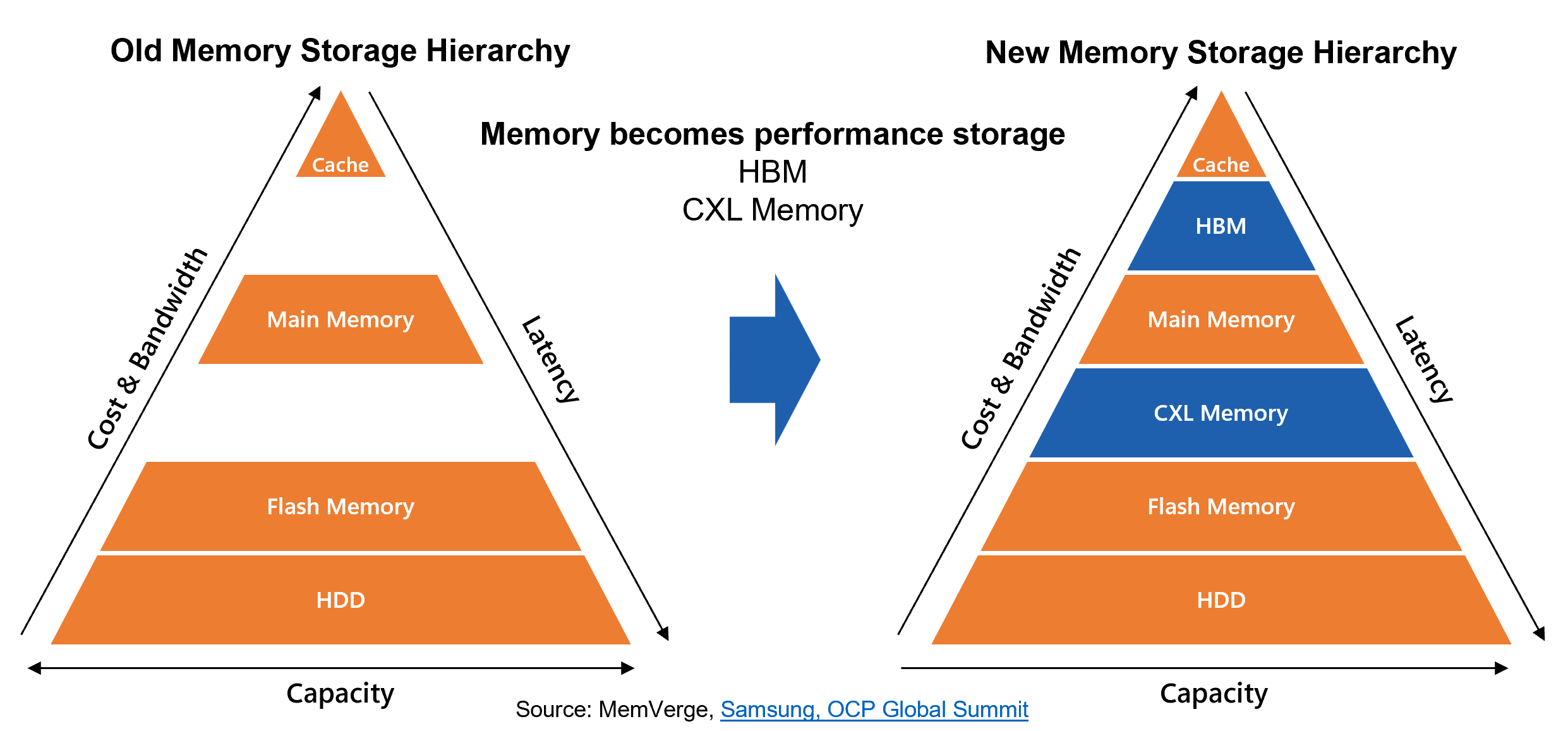

Conversely, vertical scaling extends memory capacity by adding tiers of memory, such as main system memory or CXL memory, to complement the HBM on GPUs. Because these tiers offer lower bandwidth and higher latency than HBM, vertical scaling is less prevalent today, but it holds promise as ongoing software and hardware innovations improve its performance and feasibility.
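Vertical scaling can be pictured as a placement problem: keep as much of the model as possible in the fastest tier and spill the rest to slower ones. The sketch below is a deliberately simple, capacity-only greedy policy, not MemVerge's algorithm; the `Tier` class, tier capacities, and 2 GB layer sizes are made-up illustrative values, and real tiering software also weighs bandwidth and access patterns.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Tier:
    name: str
    capacity_gb: float


def place_layers(layer_sizes_gb: List[float], tiers: List[Tier]) -> Dict[str, List[int]]:
    """Assign each layer, in order, to the fastest tier that still has room.

    `tiers` must be ordered fastest-first, e.g. GPU HBM, then host DRAM, then CXL.
    Capacity is the only criterion; bandwidth and access frequency are ignored."""
    free = {t.name: t.capacity_gb for t in tiers}
    placement: Dict[str, List[int]] = {t.name: [] for t in tiers}
    for idx, size in enumerate(layer_sizes_gb):
        for t in tiers:
            if free[t.name] >= size:
                free[t.name] -= size
                placement[t.name].append(idx)
                break
        else:  # No tier had room for this layer.
            raise MemoryError(f"layer {idx} ({size} GB) does not fit in any tier")
    return placement


# Illustrative values only: 70 layers of 2 GB each (140 GB of weights).
tiers = [Tier("HBM", 80), Tier("DRAM", 32), Tier("CXL", 256)]
plan = place_layers([2.0] * 70, tiers)
print({name: len(layers) for name, layers in plan.items()})
# {'HBM': 40, 'DRAM': 16, 'CXL': 14}
```

With only the 80 GB HBM tier, the same model would not fit at all; the extra tiers trade some speed for the capacity to run it.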

Central to Big Memory Computing is intelligent optimization of the memory-storage hierarchy within AI infrastructure. Shared-memory technologies let processors on different server nodes access the same memory region, reducing the communication overhead of scaling GPU memory horizontally. In addition, intelligent memory tiering and offloading, along with multi-node memory sharing, are poised to play a pivotal role in maximizing GPU utilization and overall system performance.
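Intelligent tiering is typically driven by observed access patterns rather than by capacity alone. As a minimal sketch, assuming a simple frequency-based (hot/cold) policy rather than any particular product's algorithm, the code below keeps the most frequently accessed pages in the fast tier and places the rest in the slow tier; the page IDs, sampling window, and function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, Set, Tuple


def tier_pages(accesses: Iterable[int], fast_capacity: int) -> Tuple[Set[int], Set[int]]:
    """Split observed pages into (hot, cold) sets by access count.

    The `fast_capacity` most-accessed pages go to the fast tier (e.g. GPU HBM
    or local DRAM); every other page seen goes to the slow tier (e.g. CXL).
    Ties are broken by first appearance, as Counter.most_common does."""
    counts = Counter(accesses)
    hot = {page for page, _ in counts.most_common(fast_capacity)}
    return hot, set(counts) - hot


def retier(prev_hot: Set[int], accesses: Iterable[int], fast_capacity: int):
    """Re-run the policy on a new sampling window; return (hot, promote, demote)."""
    hot, _ = tier_pages(accesses, fast_capacity)
    return hot, hot - prev_hot, prev_hot - hot


# Hypothetical window of page accesses.
window = [1, 1, 1, 2, 2, 3, 4, 4, 4, 4, 5]
hot, cold = tier_pages(window, fast_capacity=2)
print(sorted(hot), sorted(cold))        # [1, 4] [2, 3, 5]
hot, promote, demote = retier({1, 2}, window, fast_capacity=2)
print(sorted(promote), sorted(demote))  # [4] [2]
```

Running the policy once per sampling window turns it into a migration plan: `promote` lists pages to move into the fast tier, and `demote` lists pages to move out of it.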

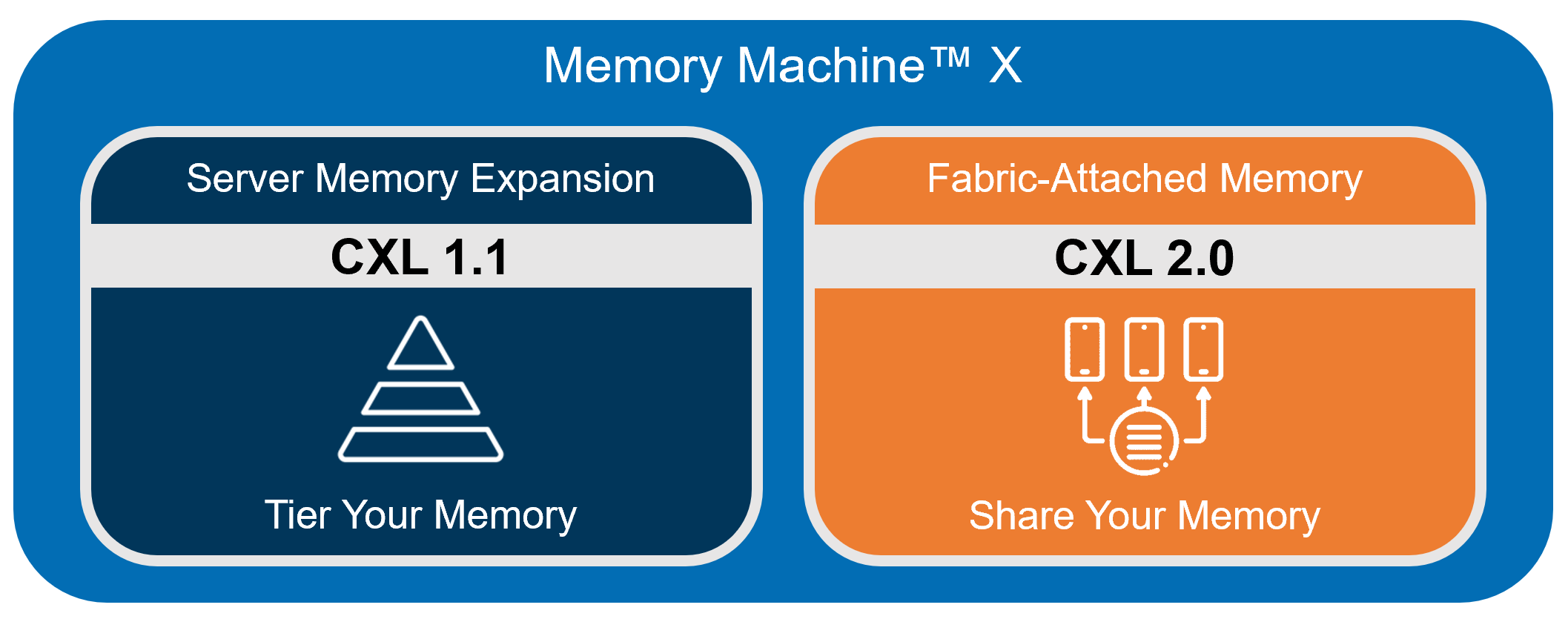

MemVerge is at the forefront of Big Memory Computing and has spent six years developing cutting-edge technologies in this domain. Our flagship product, Memory Machine for CXL, incorporates best-of-breed memory tiering and memory sharing technologies, offering a robust solution for AI use cases.

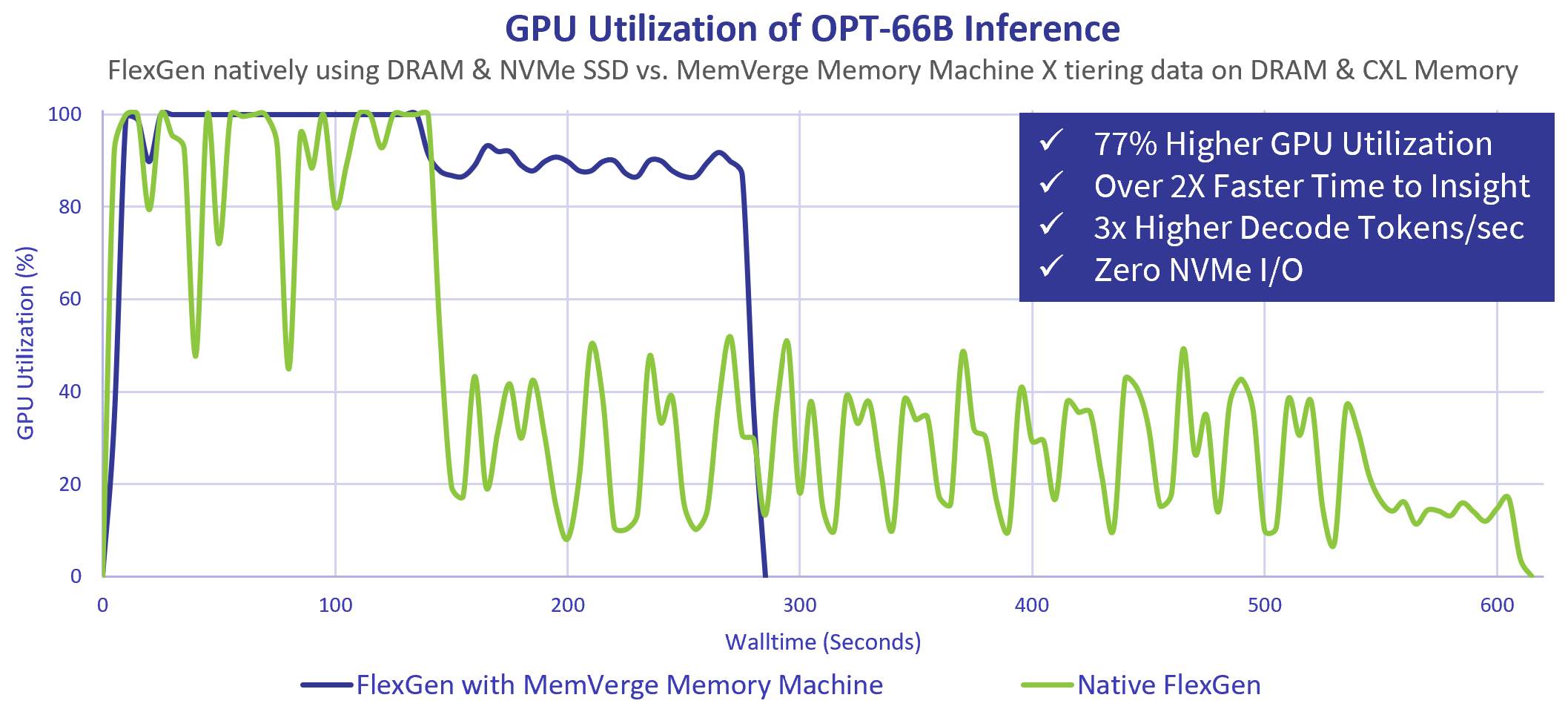

One example is a groundbreaking joint solution from MemVerge and Micron that uses intelligent tiering of CXL memory to boost LLM performance by offloading data from GPU memory to CXL memory. The chart below shows that intelligently tiered memory increases the utilization of precious GPU resources by 77% while more than doubling the speed of batch inference on OPT-66B, a 66-billion-parameter language model.

In conclusion, the growing size and complexity of AI models are driving the need for innovative solutions to overcome the memory wall. Big Memory Computing answers the call, unlocking the full potential of AI through vertical and horizontal memory scaling coupled with intelligent optimization techniques. MemVerge stands ready to apply its expertise in Big Memory Computing and CXL to help prospective clients tackle the intricate challenges of the era of AI.