What Does DeepSeek Mean for Enterprise AI?

Since OpenAI introduced ChatGPT in November 2022, the world has been swept up in the wave of generative AI. Enterprises are now actively exploring how to leverage AI to increase productivity, streamline operations, and gain a competitive edge in an increasingly digital-first world. Software development, IT management, and customer support were among the first areas to feel the impact.

Killer Apps for Generative AI: Software Development, IT Management, Customer Support

As enterprises began to adopt these AI solutions, a critical challenge emerged: the handling of proprietary data. Many businesses operate with sensitive or confidential information that they are unwilling to share with third-party API providers. This concern is particularly acute in industries like healthcare, finance, and legal services, where data privacy and security are paramount. Techniques like Retrieval-Augmented Generation (RAG) do not fully address this problem: when the model is hosted by a third party, the retrieved proprietary passages are still sent to that provider as part of the prompt.

Luckily, we are witnessing a significant acceleration in open-source innovation. This week, DeepSeek’s release of its R1 models has garnered attention for their ability to achieve results similar to OpenAI’s o1 while reportedly using far less computational power for training. DeepSeek is open source and open weights, which should lead to further innovation and optimization by others in the open-source community in the coming months. These advancements are making it increasingly feasible for enterprises to fine-tune open-source models on their own proprietary data and deploy them in their own environments, addressing the data privacy and security problems while possibly enjoying better cost efficiency.

The infrastructure supporting these AI workloads is also undergoing a significant transformation. Traditional data centers, built around x86-centric architectures, are being reimagined to accommodate the unique demands of AI. At the heart of this shift is the move toward GPU-centric architectures, which are better suited to handle the parallel processing requirements of AI workloads.

However, this transition is not without its challenges. Unlike traditional CPUs, GPUs lack a mature software layer for virtualization, resource pooling, and sharing. This creates a critical gap in the ability to efficiently allocate and manage GPU resources across different users and workloads within an enterprise. As AI workloads grow in complexity and scale, this gap becomes increasingly apparent, necessitating a new approach to AI infrastructure management.

With the rise of enterprise AI workloads and the emergence of GPU-centric data centers, there is a pressing need for a new layer of software that can automate the provisioning, scaling, and management of resources. This software layer, which we call AI Infra Automation Software, is designed to address the unique challenges of deploying and managing AI workloads in modern data centers. As the diagram below shows, AI Infra Automation Software sits between enterprise AI workloads and data center hardware.

New Class of AI Infra Automation Software

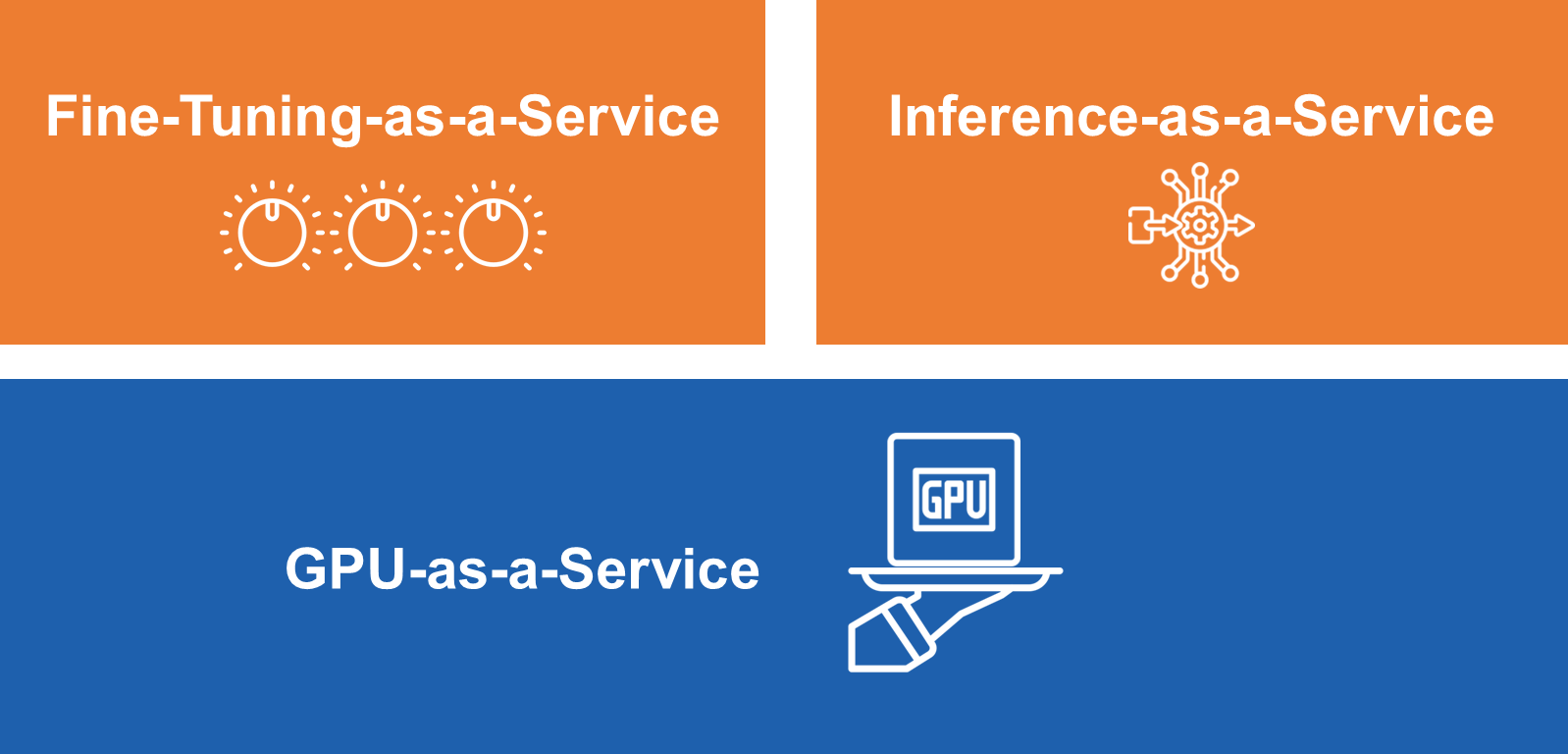

At its core, AI Infra Automation Software consists of three key components:

Enterprise GPU-as-a-Service Software

This foundational layer manages GPU clusters, enabling efficient pooling and sharing of GPU resources. It automatically maps these resources to AI workloads based on demand, ensuring optimal utilization and performance.
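To make the pooling idea concrete, here is a minimal sketch of a shared GPU pool that grants and reclaims GPUs on request. It is an illustration only, not how Memory Machine AI is implemented: the `GpuPool` class, its methods, and the workload names are all hypothetical, and it assumes identical GPUs and whole-GPU allocation.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Hypothetical shared pool of identical GPUs (illustration only)."""
    total: int
    allocations: dict = field(default_factory=dict)  # workload name -> GPU count

    @property
    def free(self) -> int:
        """GPUs not currently assigned to any workload."""
        return self.total - sum(self.allocations.values())

    def allocate(self, workload: str, gpus: int) -> bool:
        """Grant `gpus` to `workload` if the pool has room; otherwise refuse."""
        if gpus <= 0 or gpus > self.free:
            return False
        self.allocations[workload] = self.allocations.get(workload, 0) + gpus
        return True

    def release(self, workload: str) -> int:
        """Return all of a workload's GPUs to the pool and report how many."""
        return self.allocations.pop(workload, 0)

    def utilization(self) -> float:
        """Fraction of the pool currently in use."""
        return (self.total - self.free) / self.total if self.total else 0.0


pool = GpuPool(total=8)
granted_training = pool.allocate("training-job", 6)
granted_inference = pool.allocate("inference-svc", 4)  # refused: only 2 GPUs free
pool.release("training-job")
granted_retry = pool.allocate("inference-svc", 4)      # fits after the release
```

A real GPU-as-a-Service layer would also need to handle priorities, preemption, fractional GPUs, and placement across nodes, none of which this sketch attempts.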

Inference-as-a-Service Software

Built on top of the GPU-as-a-Service layer, this component automates the deployment of popular AI models, such as Llama or DeepSeek, for enterprise users. It also supports auto-scaling, dynamically adjusting the number of model-serving nodes as demand fluctuates.
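As a rough illustration of auto-scaling, the sketch below computes how many model-serving nodes a given request rate would need. It is not taken from any product: the function name `desired_replicas`, the per-node capacity figure, and the default bounds are assumptions chosen for the example.

```python
import math


def desired_replicas(
    requests_per_sec: float,
    capacity_per_replica: float,
    min_replicas: int = 1,
    max_replicas: int = 8,
) -> int:
    """Return the serving-node count needed for the load, clamped to bounds.

    `capacity_per_replica` is the (assumed) request rate one node can sustain.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_sec / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))


idle = desired_replicas(0, capacity_per_replica=10)      # floor at min_replicas
normal = desired_replicas(25, capacity_per_replica=10)   # 2.5 rounds up
spike = desired_replicas(1000, capacity_per_replica=10)  # capped at max_replicas
```

Rounding up avoids under-provisioning, and the upper bound keeps a traffic spike from claiming every GPU in the shared pool. Production autoscalers typically add smoothing or cooldown periods so that the node count does not oscillate.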

Fine-tuning-as-a-Service Software

This layer provides AI developers with the tools they need to experiment with, fine-tune, and test AI models. It simplifies the process of customizing models to incorporate proprietary data or to meet specific business needs, accelerating the development cycle.

AI Infra Automation Software Components

Together, these components form a comprehensive solution for automating enterprise AI infrastructure, enabling businesses to focus on innovation rather than infrastructure management.

Over the past year, the MemVerge engineering team has been hard at work developing AI Infra Automation Software. Our first version of Memory Machine AI software will be available through our Pioneer Program in February. This initial release focuses on enabling platform engineering teams to establish an enterprise-grade GPU-as-a-Service. By providing robust management capabilities for GPU clusters, Memory Machine AI lays the groundwork for efficient resource allocation and utilization. You can learn more about its features and capabilities by watching the MemVerge presentation at AI Field Day.

MemVerge Presentations at AI Field Day 6

Supercharging AI Infra: Charles Fan, CEO and Co-founder, MemVerge

Memory Machine AI GPU-as-a-Service: Steve Scargall, Director of Product Management, MemVerge

Transparent Checkpointing for AI: Bernie Wu, Vice-President of Strategic Partnerships, MemVerge

Fireside Chat: Steve Yatko, CEO and Founder, Oktay Technology

While GPU-as-a-Service is a critical first step, it is just the beginning of our journey to automate enterprise AI infrastructure. In the coming months, we will be rolling out Inference-as-a-Service and Fine-tuning-as-a-Service capabilities, further enhancing the ability of enterprises to deploy and customize AI models at scale. These advancements will empower businesses to deploy new open-source models with a single click, to auto-scale model deployments as demand fluctuates, and to accelerate AI development and fine-tuning.

Memory Machine AI Dashboard

Memory Machine AI includes the GPU configuration and real-time utilization telemetry needed for automated optimization, as well as per-department usage data that can be used for billing.
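To show how per-department usage data could feed billing, here is a small sketch that totals GPU-hours by department. The record layout (department, GPU count, hours) and the function name are hypothetical and are not the dashboard's actual data model.

```python
from collections import defaultdict


def gpu_hours_by_department(records):
    """Sum GPU-hours per department from (department, gpus, hours) records."""
    totals = defaultdict(float)
    for department, gpus, hours in records:
        totals[department] += gpus * hours
    return dict(totals)


# Made-up sample records: (department, GPUs held, hours held)
usage = [
    ("research", 4, 2.5),
    ("support", 1, 8.0),
    ("research", 2, 1.0),
]
totals = gpu_hours_by_department(usage)
```

Multiplying each department's total by an internal rate per GPU-hour would then give a simple chargeback figure.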

The rapid improvement in open-source AI models, the transformation of AI data centers, and the emergence of AI Infra Automation Software represent a pivotal moment in the evolution of enterprise AI. As businesses continue to embrace AI to drive innovation and competitiveness, the need for efficient, scalable, and secure infrastructure will only grow. At MemVerge, we are committed to leading this transformation by delivering cutting-edge solutions that automate and simplify AI infrastructure management. With Memory Machine AI and our upcoming offerings, we aim to empower enterprises to unlock the full potential of AI, enabling them to innovate faster and smarter than ever before. 2025 will be the year of Enterprise AI, and AI Infra Automation Software will be a catalyst.