Memory Machine™ for Kubernetes

Introducing MemVerge Memory Machine for Kubernetes Beta

A game-changer for Site Reliability Engineers and DevOps professionals. Harness high-performance memory snapshot capabilities for hot restarts of stateful apps and pods, with minimal overhead and no changes to your existing Kubernetes setup. Seamlessly preserve application and machine memory states, enhancing current Kubernetes Pod Migration mechanisms. See the benefits for yourself by joining our early adopter program.

This Is for You If You’re:

Machine Learning Engineers

Data Scientists and Machine Learning Engineers use Memory Machine for Kubernetes to streamline the deployment, scaling, and management of ML pipelines, ensuring consistent environments so that they don't lose development time.

Site Reliability Engineers

Site Reliability Engineers (SREs) leverage Memory Machine for Kubernetes to maintain high availability of services, automate failover mechanisms, and ensure consistent deployments to avoid costly downtime for stateful applications.

DevOps Engineers

DevOps Engineers use Memory Machine for Kubernetes to automate the deployment, scaling, and management of applications, fostering continuous integration and continuous delivery (CI/CD) practices.

IT Managers

IT Managers and Infrastructure Architects adopt Memory Machine for Kubernetes to restart pods immediately, reducing IT infrastructure costs through more efficient use of resources.

Why You Will Love This

Kubernetes presents a transformative approach to IT cost optimization, especially for stateful applications running on discounted spot instances and when transitioning from legacy VM platforms. However, the frequent pod kills and evictions in K8s make it challenging to operate stateful applications that are not fault-tolerant and were never designed for frequent cold restarts. The result is lower user productivity, lower resource utilization, and higher administrative costs.

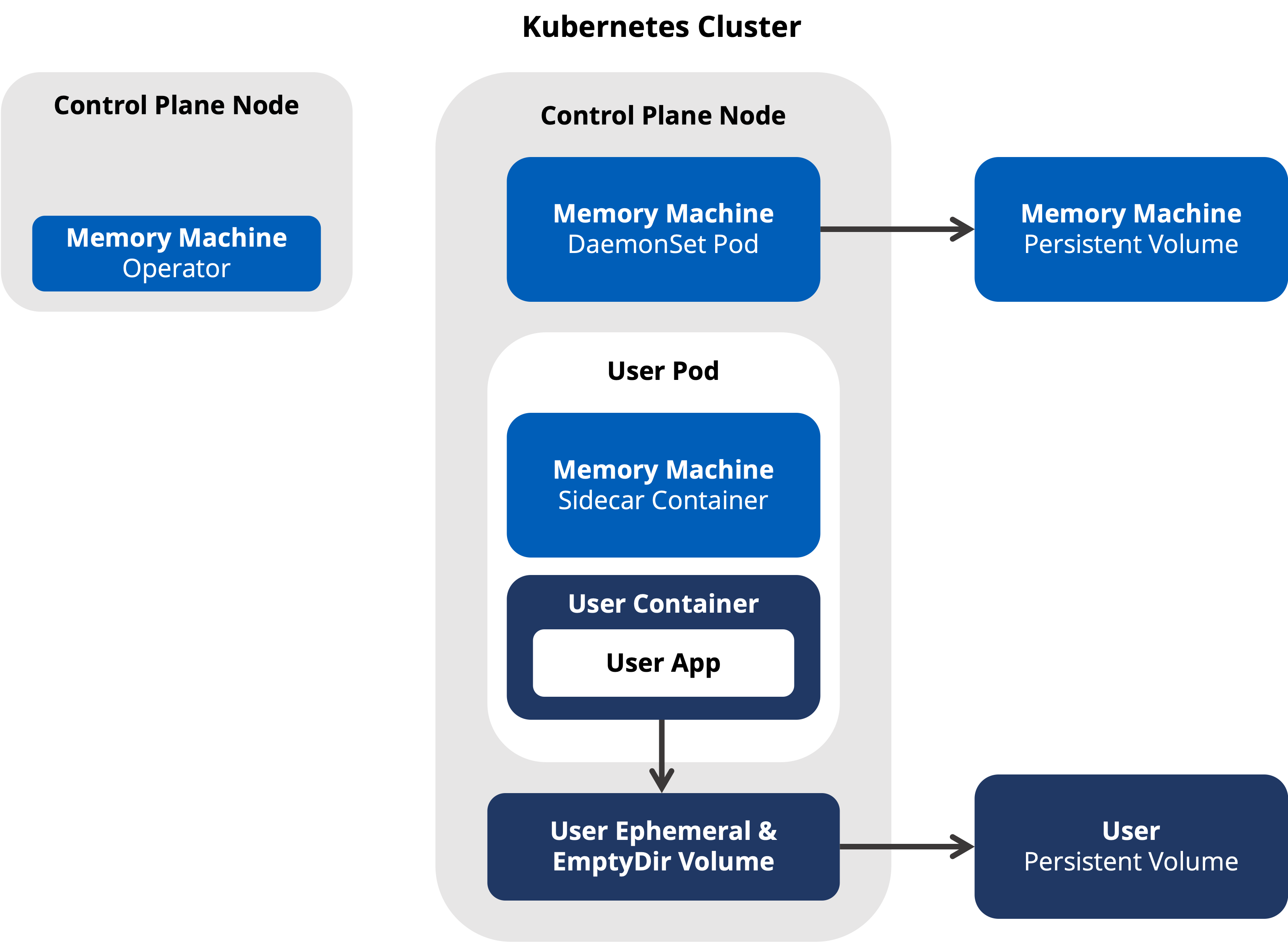

The Memory Machine for Kubernetes Operator is designed to address these types of operational challenges by enabling stateful applications to resume gracefully from the point where they were interrupted.

Memory Machine for Kubernetes automatically checkpoints the node and gracefully migrates the running application state to a new node, without losing previous work or requiring a cold restart. By leveraging Memory Machine for Kubernetes’ infrastructure-as-code and auto-scaling capabilities, organizations can streamline operations, minimize manual errors, and significantly cut IT costs, all while enhancing system performance and dependability.

Cut Cluster Costs

Stateful Apps on Kubernetes

Kubernetes presents a transformative approach to IT cost optimization, especially for stateful applications running on discounted spot instances and when transitioning from legacy VM platforms. Spot instances, with their dynamic pricing, coupled with Kubernetes’ ability to ensure application reliability and seamless recovery, offer substantial savings. Simultaneously, migrating from VMs to Kubernetes-managed containers results in efficient resource utilization, reduced management overhead, and flexible scaling. By leveraging Kubernetes’ infrastructure-as-code and auto-scaling capabilities, organizations can streamline operations, minimize manual errors, and significantly cut IT costs, all while enhancing system performance and dependability.

- Node Draining for Maintenance: Memory Machine for Kubernetes periodically drains nodes and transfers running stateful apps to other nodes in order to apply software patches and perform other maintenance. It automatically checkpoints the node and gracefully migrates the running application state to the new node without losing previous work or requiring a cold restart.

- Public Cloud Cost Optimization Using Spot Instances: Run Kubernetes nodes with stateful apps on Spot Instances to save up to 70% while automatically hot restarting any pre-empted pods on alternative instances.

- Seamless Recovery: Memory Machine for Kubernetes Operator can be used and configured in conjunction with other spot instance operators to ensure a hot restart of a pod elsewhere after an instance has been pre-empted.
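To see why hot restarts matter for the spot-instance savings described above, here is a back-of-the-envelope cost model in Python. It is a sketch under stated assumptions, not MemVerge's pricing model: the prices, preemption count, and hours of lost work are hypothetical, and the function names `billed_cost` and `savings_pct` are illustrative. The idea is that work redone after a preemption is billed again at the spot rate, so a cold restart that throws away hours of in-memory progress eats into the discount, while resuming from a recent checkpoint loses only the work done since that checkpoint.

```python
def billed_cost(work_hours: float, price_per_hour: float,
                preemptions: int = 0, lost_hours_per_preemption: float = 0.0) -> float:
    """Total compute bill for a job, counting work that must be redone after preemptions.

    Hypothetical model: every preemption throws away `lost_hours_per_preemption`
    hours of progress, and those hours are billed again when the job reruns them.
    """
    billed_hours = work_hours + preemptions * lost_hours_per_preemption
    return billed_hours * price_per_hour


def savings_pct(cost: float, baseline: float) -> float:
    """Percentage saved relative to a baseline cost."""
    return 100.0 * (1.0 - cost / baseline)


if __name__ == "__main__":
    # Hypothetical prices for a 10-hour stateful job.
    ON_DEMAND = 1.00  # $/hour
    SPOT = 0.30       # $/hour, i.e. a 70% discount
    baseline = billed_cost(10, ON_DEMAND)

    # Cold restart: assume each of 3 preemptions loses about 2 h of in-memory progress.
    cold = billed_cost(10, SPOT, preemptions=3, lost_hours_per_preemption=2.0)
    # Hot restart from a checkpoint: assume only about 6 minutes are lost per preemption.
    hot = billed_cost(10, SPOT, preemptions=3, lost_hours_per_preemption=0.1)

    for label, cost in [("on-demand", baseline),
                        ("spot, cold restart", cold),
                        ("spot, hot restart", hot)]:
        print(f"{label:20s} ${cost:5.2f}  ({savings_pct(cost, baseline):4.1f}% saved)")
```

With these assumed numbers the cold-restart run keeps only about half of the spot discount, while the checkpointed run stays close to the full 70%. The gap widens as jobs get longer or preemptions get more frequent.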

Business Continuity & Developer Productivity

Auto-Scale Complex K8s Stateful Apps:

Memory Machine for Kubernetes offers a modern, efficient, and cost-effective alternative to legacy infrastructure platforms. By harnessing its capabilities for stateful applications on Kubernetes and transitioning away from older monolithic architecture models, organizations can realize substantial IT cost savings while enhancing performance, reliability and scalability.

- Efficient Resource Utilization: Containers managed by Kubernetes are, by design, lightweight compared to traditional virtual machines. By migrating stateful applications from traditional infrastructure to containers managed with Memory Machine for Kubernetes, organizations can achieve higher density, running more applications on the same hardware or cloud resources, which translates directly into cost savings.

- Infrastructure as Code: Memory Machine for Kubernetes lets you define infrastructure as code, streamlining and automating many deployment and scaling tasks. This not only reduces manual intervention and the associated labor costs but also minimizes errors that might arise from manual operations.

- Reduced Overhead: Legacy infrastructure platforms often come with significant management overhead, both in human resources and in licensing costs. Memory Machine for Kubernetes, being open-source, eliminates many of these costs, and its declarative nature simplifies management, reducing the need for specialized personnel.

- Flexibility & Scalability: Memory Machine for Kubernetes offers auto-scaling based on workload demands, ensuring optimal resource allocation. Use the Memory Machine for Kubernetes (MMK) Operator in conjunction with horizontal scaling for automated bin-packing while maintaining the business continuity of stateful applications. Avoid out-of-memory (OOM) kills and over-provisioning by automatically resizing pod CPU and memory requests based on application usage, and seamlessly migrate a pod to another node when the original node does not have enough resources. This means organizations only use (and pay for) the compute resources they genuinely need.
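The usage-based resizing described in the last bullet can be illustrated with a generic rightsizing heuristic: set the memory request to a high percentile of observed usage plus a safety margin, then flag the pod for migration when its current node can no longer fit that request. This is a minimal Python sketch that assumes per-pod memory usage samples (in MiB) are already available. The percentile, the 15% headroom, the 64 MiB floor, and the function names (`percentile`, `recommend_request_mib`, `needs_migration`) are hypothetical and do not describe how the MMK Operator actually computes or applies its recommendations.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[int], p: float) -> int:
    """Nearest-rank percentile (0 < p <= 100) of a non-empty sample list."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_request_mib(usage_mib: Sequence[int], p: float = 95,
                          headroom_pct: int = 15, floor_mib: int = 64) -> int:
    """Hypothetical rightsizing rule: p-th percentile usage plus a headroom margin.

    Sizing to observed usage avoids over-provisioning; the headroom leaves room
    for spikes so the container is less likely to be OOM-killed.
    """
    base = percentile(usage_mib, p)
    # Integer ceiling division keeps the result exact (no float rounding).
    padded = -(-base * (100 + headroom_pct) // 100)
    return max(floor_mib, padded)


def needs_migration(request_mib: int, node_free_mib: int) -> bool:
    """True when the resized request no longer fits on the pod's current node."""
    return request_mib > node_free_mib


if __name__ == "__main__":
    # Hypothetical memory usage samples (MiB) collected from a stateful pod.
    samples = [400, 405, 410, 415, 420, 425, 430, 435, 440, 900]
    request = recommend_request_mib(samples, p=90)
    print(f"recommended memory request: {request} MiB")
    print("migrate to another node:", needs_migration(request, node_free_mib=480))
```

A high percentile rather than the maximum keeps a single spike (the 900 MiB sample) from inflating the request, while the headroom absorbs ordinary variation. Choosing the percentile and margin is a trade-off between OOM risk and over-provisioning.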