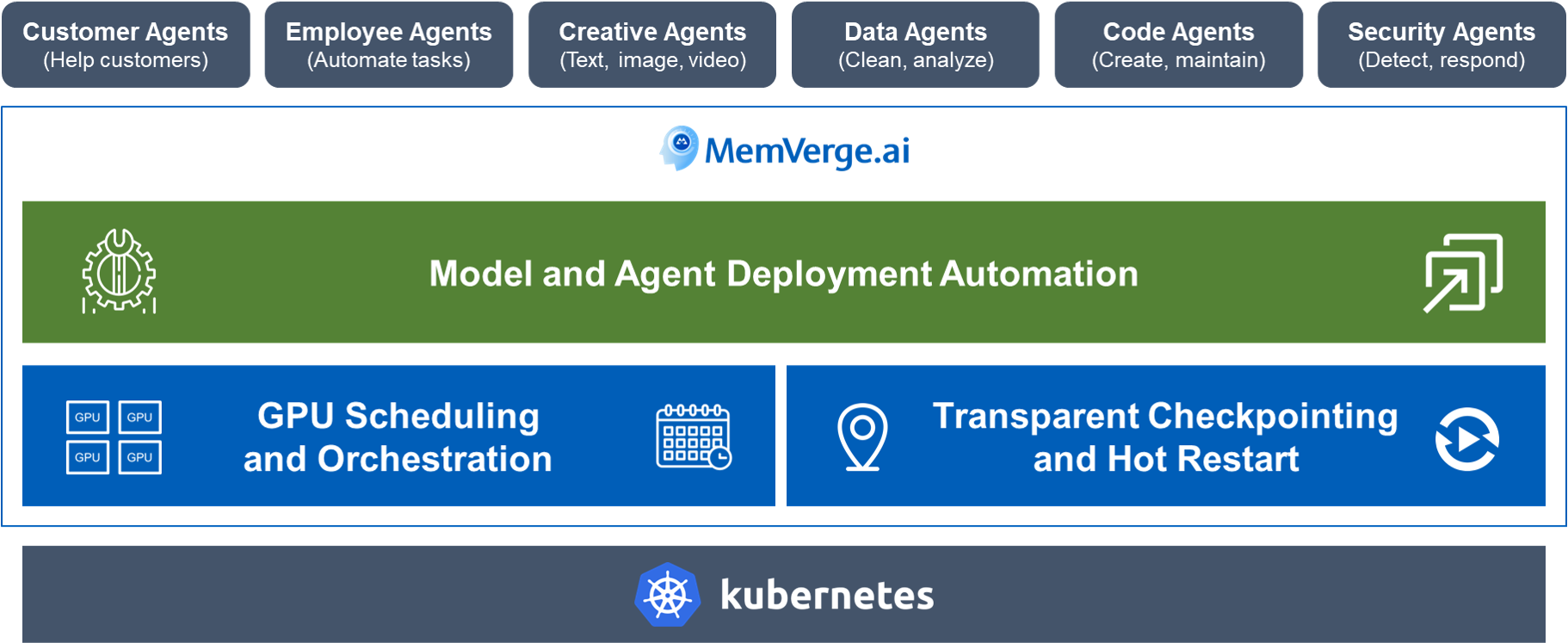

Optimize enterprise GPU orchestration, automate AI model and agent deployment

For MLOps engineers, IT pros, and app developers responsible for optimizing AI infrastructure, MemVerge.ai is an operating system for AI infrastructure that accelerates workload deployment and streamlines workload management on-prem and in the cloud. The results are faster time to deployment, higher GPU utilization, and faster job completion.

Key Capabilities

Model and Agent Deployment Automation

Simplify deployment of open-source large language models (LLMs) for inference and fine-tuning. Securely auto-scale infrastructure for multiple agents, multiple models, and RAG systems that capture proprietary enterprise data.

GPU Scheduling & Orchestration

Eliminate idle resources and maximize utilization with intelligent GPU sharing algorithms. Boost job performance on the available GPUs with advanced scheduling policies. Optimize resource allocation by partitioning GPU infrastructure across departments and projects.
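
To make the idea of partitioning plus sharing concrete, here is a minimal Python sketch. It is not MemVerge's algorithm: the `allocate` function, the department names, and the lending rule are all hypothetical, chosen only to illustrate how guaranteed per-department quotas can coexist with lending idle GPUs to departments that need more.

```python
def allocate(quotas: dict[str, int], demand: dict[str, int]) -> dict[str, int]:
    """Hypothetical allocator: guaranteed quotas first, then lend idle GPUs."""
    # Pass 1: each department gets what it asks for, up to its guaranteed quota.
    grant = {dept: min(demand.get(dept, 0), quota) for dept, quota in quotas.items()}
    idle = sum(quotas.values()) - sum(grant.values())

    # Pass 2: lend idle GPUs, one at a time in round-robin order,
    # to departments whose demand is still unmet.
    while idle > 0:
        needy = [d for d in sorted(quotas) if demand.get(d, 0) > grant[d]]
        if not needy:
            break  # nobody wants more; remaining GPUs stay idle
        for dept in needy:
            if idle == 0:
                break
            grant[dept] += 1
            idle -= 1
    return grant


# Illustrative numbers only: two departments with 4 GPUs each.
grants = allocate(
    quotas={"research": 4, "product": 4},
    demand={"research": 7, "product": 1},
)
print(grants)
```

In this example, "product" uses only one of its four GPUs, so the three it leaves idle are lent to "research" instead of sitting unused.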

Transparent Checkpointing and Hot Restart

Seamlessly move user jobs across your AI platform, safeguard against out-of-memory conditions, and prioritize critical tasks by automatically suspending and resuming lower-priority jobs, ensuring uninterrupted and efficient resource management.
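
The suspend-and-resume behavior described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the product's implementation: the `Job` and `Cluster` classes are hypothetical, each job occupies one GPU, and the `done_steps` counter stands in for checkpointed state.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    priority: int            # higher number = more important
    total_steps: int
    done_steps: int = 0      # stand-in for checkpointed progress
    state: str = "pending"   # pending | running | suspended | finished


class Cluster:
    """Hypothetical scheduler: one job per GPU, highest priority first."""

    def __init__(self, gpus: int):
        self.gpus = gpus
        self.jobs: list[Job] = []

    def running(self) -> list[Job]:
        return [j for j in self.jobs if j.state == "running"]

    def submit(self, job: Job) -> None:
        self.jobs.append(job)
        self._schedule()

    def _schedule(self) -> None:
        # Rank unfinished jobs by priority; the stable sort keeps
        # submission order among jobs with equal priority.
        active = [j for j in self.jobs if j.state != "finished"]
        active.sort(key=lambda j: -j.priority)
        for rank, job in enumerate(active):
            if rank < self.gpus:
                job.state = "running"      # start or resume
            elif job.state == "running":
                job.state = "suspended"    # progress stays in done_steps

    def step(self) -> None:
        # Advance every running job by one unit of work.
        for job in self.running():
            job.done_steps += 1
            if job.done_steps >= job.total_steps:
                job.state = "finished"
        self._schedule()


cluster = Cluster(gpus=1)
low = Job("nightly-finetune", priority=1, total_steps=5)
cluster.submit(low)
cluster.step()
cluster.step()

high = Job("urgent-inference", priority=10, total_steps=2)
cluster.submit(high)          # preempts the lower-priority job
print(low.state, low.done_steps)
```

When the higher-priority job finishes, the suspended job resumes from its saved step count rather than from step zero, which is the property the "0 job restarts from beginning" claim refers to.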

Major Benefits

3x

Faster time to deployment

5x

Higher GPU Utilization

0

Job Restarts from Beginning

AI Field Day 6

Why MemVerge Fits Into Your Environment

MemVerge Presentations

Charles Fan

CEO and Co-founder

MemVerge

CEO and Co-founder Charles Fan provides an overview of large language models (LLMs), agentic AI applications, and workflows for AI workloads. He then delves into the influence of agentic AI on technology in the data center, with a particular focus on software for AI infrastructure.

Steve Scargall

Director of Product Management

MemVerge

Director of Product Management Steve Scargall introduces Memory Machine AI software from MemVerge. He explains how platform engineers, data scientists, developers & MLOps engineers, decision-makers & project leads can use the software to optimize GPU usage with strategic resource allocation, flexible GPU sharing, real-time observability & optimization, and priority management.

Bernie Wu

Vice-President of Strategic Partnerships

MemVerge

Vice-President of Strategic Partnerships Bernie Wu explains the limitations of current checkpointing technology for AI and how transparent checkpointing, in the form of an MMAI K8s operator, can address these challenges by efficiently pausing and/or relocating long-running GPU workloads without application changes or awareness.