Memory Machine Cloud


Easily Deploy Jupyter in the Cloud, Improve Performance and Optimize Costs

I use Jupyter. Is Memory Machine for me?

Jupyter Notebooks are one of the most popular scientific computing tools, helping ensure that procedures and analyses are documented, codified, and reproducible. Larger scientific computations, data gravity, and the need to share and collaborate easily across remote teams are common drivers for moving Jupyter environments to the cloud. Memory Machine is a cloud automation platform that helps you quickly deploy a scalable Jupyter environment in the cloud. Once it is deployed, Memory Machine provides advanced automation capabilities that help you maximize performance and optimize your cloud spend.

Stop Managing Cloud Resources, Do More Science

It’s free to get started, and Memory Machine can be installed in your own AWS or GCP cloud environment within minutes. You can deploy and manage Jupyter environments with Memory Machine in two ways: through our command-line interface, FLOAT, or through an intuitive web-based GUI.

Memory Machine Batch

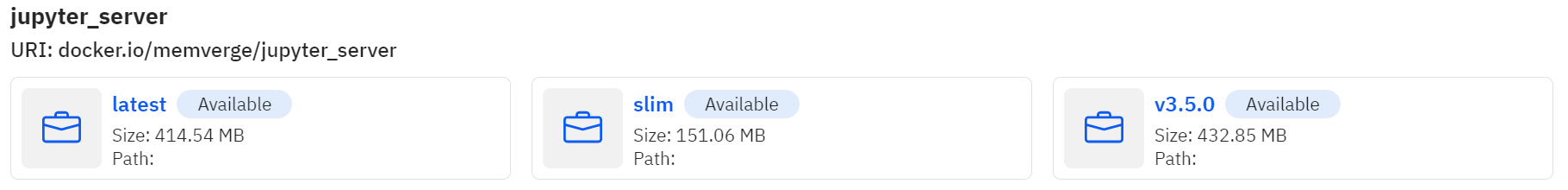

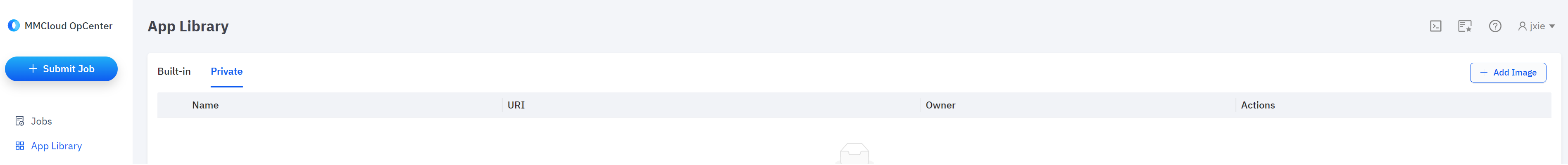

Deploy standard or custom images from our public and private App Library, shown above. Because Memory Machine supports any OCI-compliant container image, most apps are supported.

More Visibility, Efficiency, and Performance = Better Research

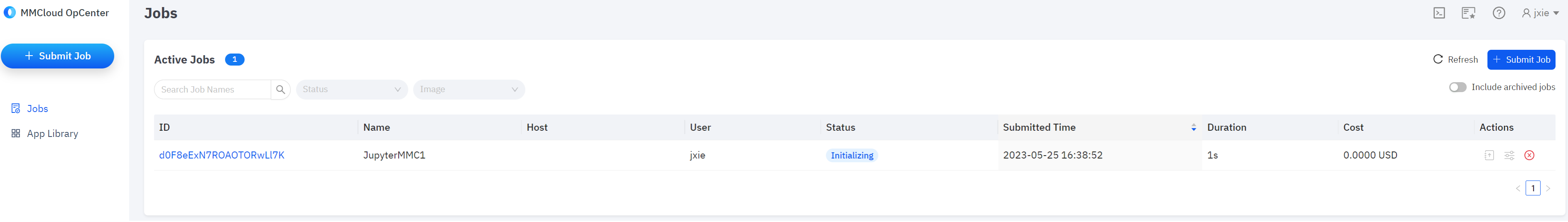

As shown above, Memory Machine Cloud lets you easily monitor all users and Jupyter sessions and take actions such as terminating or migrating a session, or elastically resizing its underlying compute resources, without losing state (user settings and unsaved work).

Memory Machine works for you as your cloud resource manager, automatically provisioning and deprovisioning the cloud resources behind both “always-on” and “batch” Jupyter workloads.

Endless Memory and Compute – Never Crash Due to Running “Out of Memory”

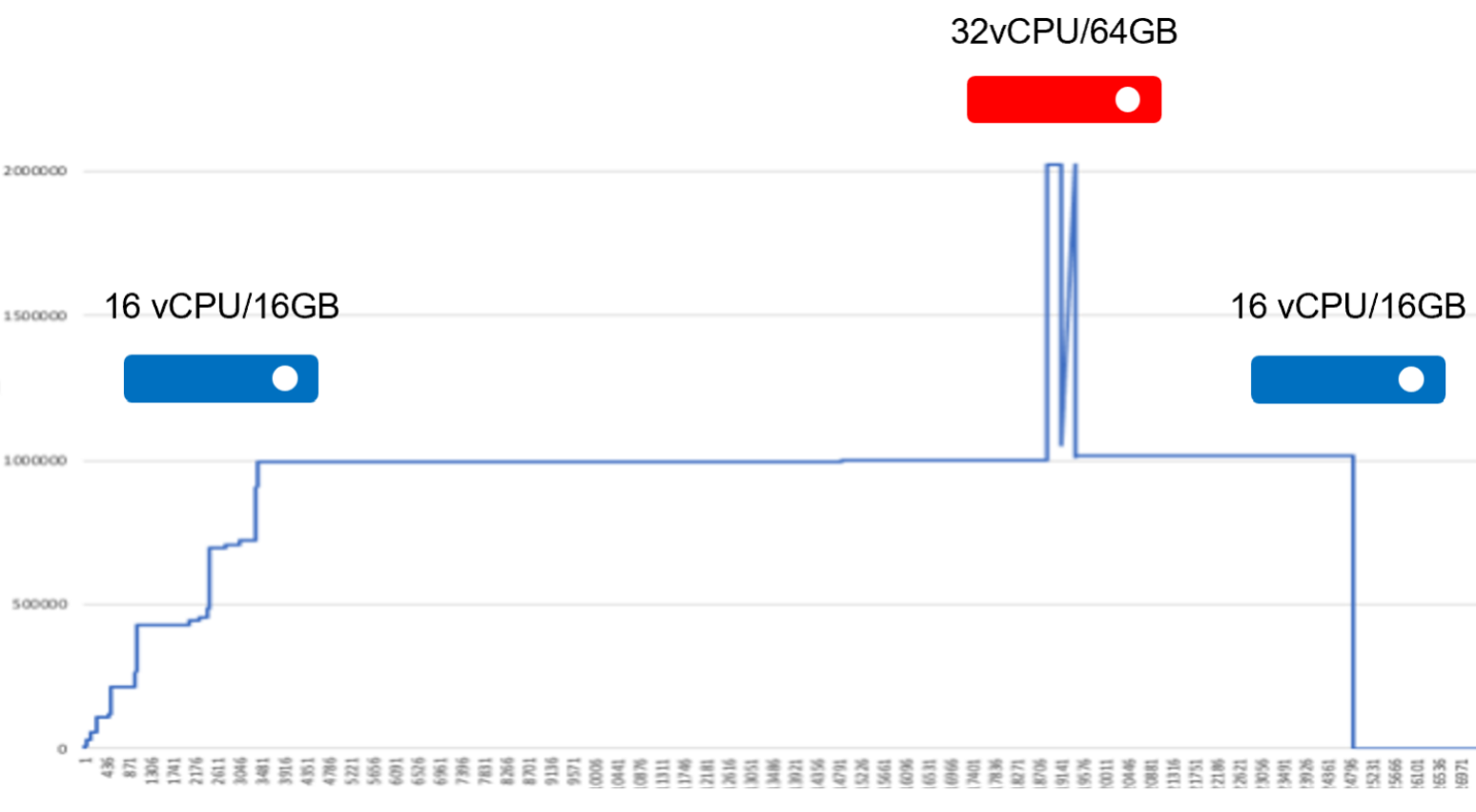

One of the most frustrating experiences is having your system crash because a running computation ran out of memory. With Memory Machine, you can kick off a job, and if it needs more memory or CPU, the Jupyter instance can be migrated automatically to a larger machine without losing the progress of running jobs, unsaved work, or user settings.

In other words, Memory Machine can auto-scale your Jupyter IDE vertically, both up and down, in real time based on actual usage. This ensures that you never have to restart due to out-of-memory errors and that larger analyses complete faster. It also ensures you never overpay for overprovisioned cloud resources: by no longer overprovisioning compute and memory, customers have been able to save 20-30% on their cloud bills.
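To make the idea of vertical right-sizing concrete, here is a minimal sketch of one possible policy: choose the smallest instance size that fits current memory and CPU usage plus a safety margin, so the same rule scales up when usage grows and down when it shrinks. This is not Memory Machine's actual algorithm; the size catalog, the names `size-4` through `size-32`, the `pick_size` function, and the 25% headroom are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSize:
    name: str
    vcpus: int
    memory_gb: int


# Hypothetical catalog of memory-optimized sizes (8GB per vCPU), smallest first.
CATALOG = [
    InstanceSize("size-4", 4, 32),
    InstanceSize("size-8", 8, 64),
    InstanceSize("size-16", 16, 128),
    InstanceSize("size-32", 32, 256),
]


def pick_size(used_memory_gb: float, used_vcpus: float, headroom: float = 0.25) -> InstanceSize:
    """Return the smallest catalog size that fits current usage plus headroom.

    Hypothetical policy: growing usage selects a larger size (scale up),
    shrinking usage selects a smaller, cheaper size (scale down).
    """
    need_mem = used_memory_gb * (1.0 + headroom)
    need_cpu = used_vcpus * (1.0 + headroom)
    for size in CATALOG:
        if size.memory_gb >= need_mem and size.vcpus >= need_cpu:
            return size
    return CATALOG[-1]  # cap at the largest size in the catalog


current = pick_size(used_memory_gb=50, used_vcpus=3)   # fits in size-8
grown = pick_size(used_memory_gb=100, used_vcpus=6)    # usage grew: scale up
shrunk = pick_size(used_memory_gb=20, used_vcpus=2)    # usage fell: scale down
print(current.name, grown.name, shrunk.name)
```

Picking from a fixed ladder of sizes, rather than an arbitrary amount of memory, mirrors how cloud VMs are sold in discrete instance types; the headroom keeps a job from landing on a machine that is only barely large enough.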

Run Jupyter on Spot VMs Continuously, Save at Least 60%, Guaranteed or Pay Nothing

Spot VMs in the cloud can cost up to 90% less than the On-Demand price for the same VM. The tradeoff is that the cloud provider can reclaim a Spot VM at any time, with only a 2-minute notice on AWS and an even shorter 30-second notice on GCP.

When a reclaim happens, Memory Machine automatically checkpoints your Jupyter VM and recovers it on a new VM (Spot if available, otherwise On-Demand). This migration ensures that no progress is lost on running jobs, and even unsaved user metadata is preserved and carried over to the new VM.

By using Memory Machine Cloud to deploy and manage your Jupyter environment, you can run a memory-optimized EC2 instance with 16 vCPUs and 128GB of memory 24/7 for only $130/month, assuming Spot pricing is 80% below On-Demand. That is about 60% less than running an instance half that size (8 vCPU, 64GB) at On-Demand prices.

| Deployment | Instance size | Relative cost | Monthly cost |
|---|---|---|---|
| On-Demand | 8 vCPU, 64GB | $$$ | $330/month |
| Spot w/ Memory Machine | 16 vCPU, 128GB | $ | $130/month |
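The arithmetic behind this comparison can be checked with a few lines of Python. This sketch uses only the figures quoted above and assumes the $130/month Spot price reflects exactly the stated 80% discount, which implies an On-Demand price of about $650/month for the 16 vCPU, 128GB instance; actual prices vary by instance type and region. The constant and function names are illustrative.

```python
# Worked check of the cost comparison, using only the figures quoted on this page.
# Assumption: the $130/month Spot price reflects exactly an 80% discount off On-Demand.

SPOT_MONTHLY = 130.0             # 16 vCPU, 128GB on Spot with Memory Machine ($/month)
SMALL_ON_DEMAND_MONTHLY = 330.0  # 8 vCPU, 64GB at On-Demand prices ($/month)
SPOT_DISCOUNT = 0.80             # assumed Spot discount vs. On-Demand


def implied_on_demand(spot_price: float, discount: float) -> float:
    """On-Demand price implied by a Spot price and a fractional discount."""
    return spot_price / (1.0 - discount)


def percent_savings(new: float, baseline: float) -> float:
    """Percentage saved by paying `new` instead of `baseline`."""
    return (1.0 - new / baseline) * 100.0


large_on_demand = implied_on_demand(SPOT_MONTHLY, SPOT_DISCOUNT)
savings = percent_savings(SPOT_MONTHLY, SMALL_ON_DEMAND_MONTHLY)
print(f"Implied On-Demand price for 16 vCPU/128GB: ${large_on_demand:.0f}/month")
print(f"Savings vs. 8 vCPU/64GB On-Demand: {savings:.1f}%")
```

In other words, paying $130 instead of $330 each month saves roughly 60%, while also doubling the available vCPUs and memory.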