CXL Use Case

Intelligent Tiering Accelerates Vector Databases

Q1’24 Memory Fabric Forum presentation of use case and Weaviate test results

Intelligent tiering with Memory Machine X Server QoS Feature

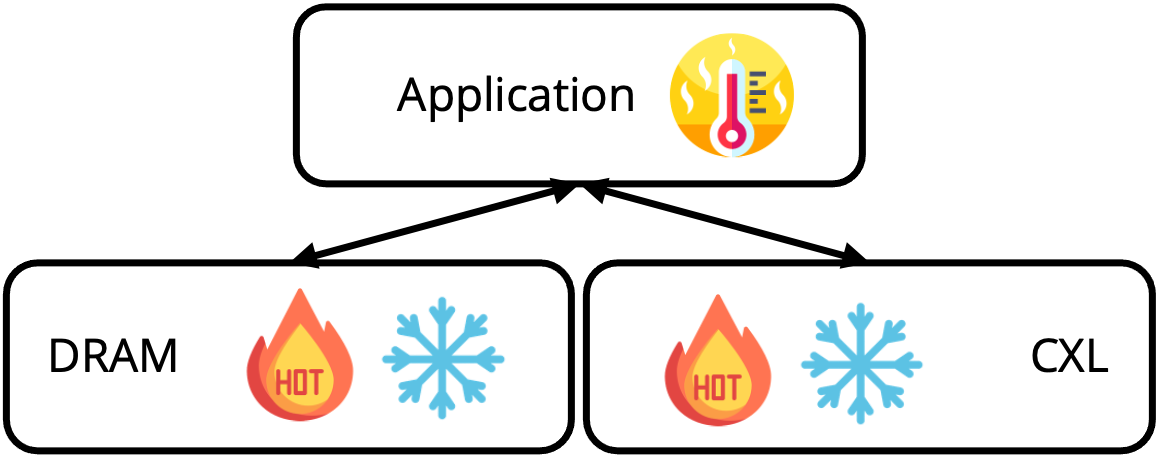

Our Memory Machine X QoS policies adapt to different application workloads by moving memory pages to optimize for either latency or bandwidth. Bandwidth-optimized placement and movement aims to maximize overall system bandwidth by strategically placing and moving data between DRAM and CXL memory according to the application’s bandwidth requirements.

The bandwidth policy engine uses the available bandwidth of all DRAM and CXL memory devices, with a user-selectable ratio of DRAM to CXL to balance bandwidth against latency.
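One way to picture a user-selectable DRAM-to-CXL ratio is as a repeating page placement pattern. The sketch below is illustrative only and is not Memory Machine X code: the function name `weighted_interleave` and its parameters are hypothetical, and it only labels page indices rather than touching real memory.

```python
def weighted_interleave(num_pages, dram_weight, cxl_weight):
    """Label each page index "DRAM" or "CXL" in a repeating
    dram_weight:cxl_weight pattern (hypothetical helper)."""
    if dram_weight < 0 or cxl_weight < 0 or dram_weight + cxl_weight == 0:
        raise ValueError("weights must be non-negative and not both zero")
    # One cycle of the pattern, e.g. 4:1 -> DRAM, DRAM, DRAM, DRAM, CXL
    pattern = ["DRAM"] * dram_weight + ["CXL"] * cxl_weight
    return [pattern[i % len(pattern)] for i in range(num_pages)]


# A 4:1 ratio sends one page in every five to CXL (20% CXL).
placement = weighted_interleave(10, dram_weight=4, cxl_weight=1)
cxl_share = placement.count("CXL") / len(placement)
```

Setting both weights to 1 gives equal interleaving, so the same helper covers both the equal and the weighted case.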

The MemVerge QoS engine moves hot and cold data between DRAM and CXL memory using equal interleaving, weighted interleaving, random page selection, and intelligent page selection.
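The difference between random and intelligent page selection can be sketched as follows. This is a simplified illustration, not the MemVerge algorithm: here "intelligent" is assumed to mean ranking pages by a per-page access count, and the function name `select_pages_for_dram` and its arguments are hypothetical.

```python
import random


def select_pages_for_dram(access_counts, dram_capacity, policy="intelligent", seed=None):
    """Return the set of page IDs to keep in DRAM; the rest would go to CXL.

    access_counts: dict mapping page ID -> observed access count (assumed input).
    dram_capacity: number of pages that fit in DRAM.
    """
    pages = list(access_counts)
    keep = min(dram_capacity, len(pages))
    if policy == "random":
        # Random selection ignores how hot each page is.
        return set(random.Random(seed).sample(pages, keep))
    if policy == "intelligent":
        # Assumed heuristic: keep the most frequently accessed pages in DRAM.
        hottest_first = sorted(pages, key=lambda p: access_counts[p], reverse=True)
        return set(hottest_first[:keep])
    raise ValueError(f"unknown policy: {policy}")


counts = {"a": 100, "b": 5, "c": 50, "d": 1}
hot_in_dram = select_pages_for_dram(counts, dram_capacity=2)
random_in_dram = select_pages_for_dram(counts, dram_capacity=2, policy="random", seed=0)
```

With the access-count heuristic, the two hottest pages (`a` and `c`) stay in DRAM, while random selection may leave a hot page on the slower tier.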

Memory Machine X delivers higher QPS and lower latency with the AI-native Weaviate vector database

Vector databases complement generative AI models by providing an external knowledge base for generative AI chatbots and by helping to ensure they provide trustworthy information.

Weaviate is an AI-native vector database used by developers to create intuitive and reliable AI-powered applications. Weaviate benchmark tests are available to measure queries per second and latency.

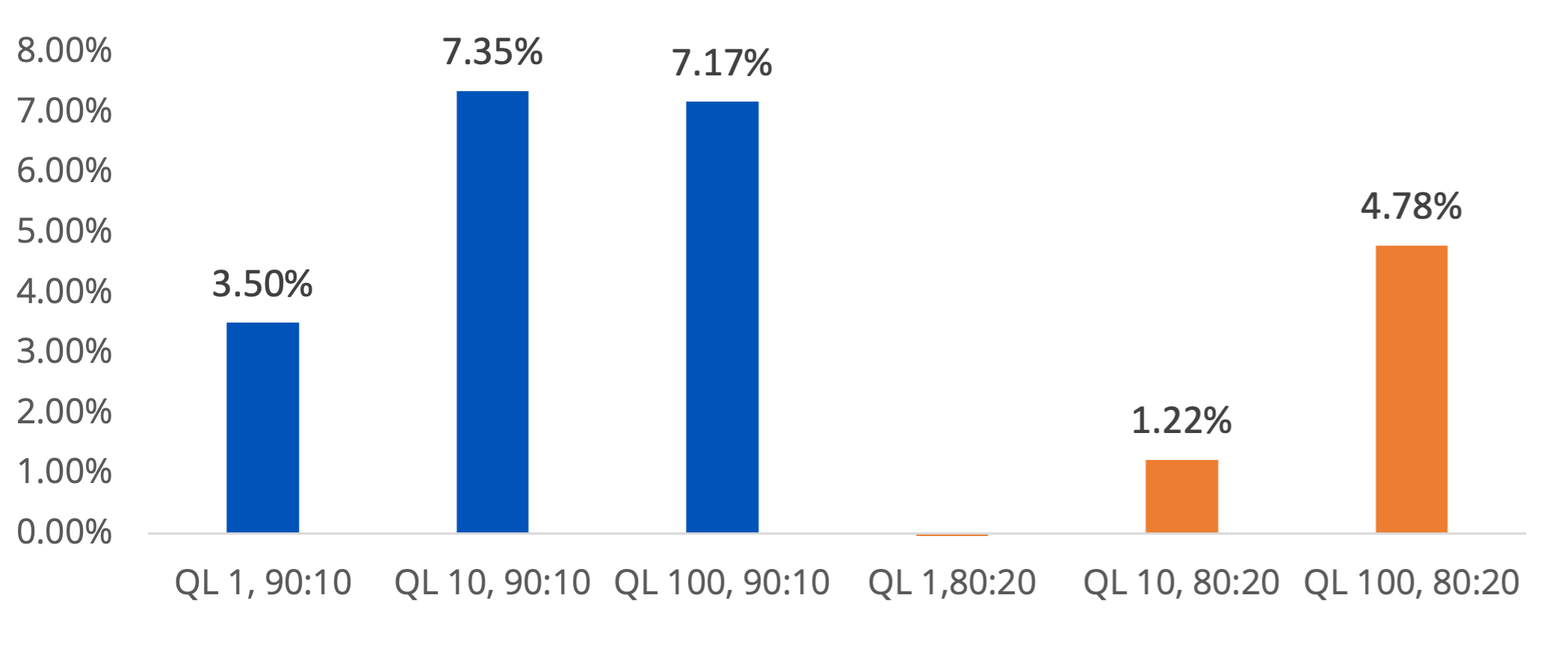

Shown below is QPS testing performed by MemVerge. Using 10% CXL and 20% CXL memory across different query limits (QL), Memory Machine X powered Weaviate to deliver up to 7.35% more queries per second.

Weaviate queries per second with Memory Machine X

(gist-960-Euclidean-128-32 – Queries per Second – EF512)

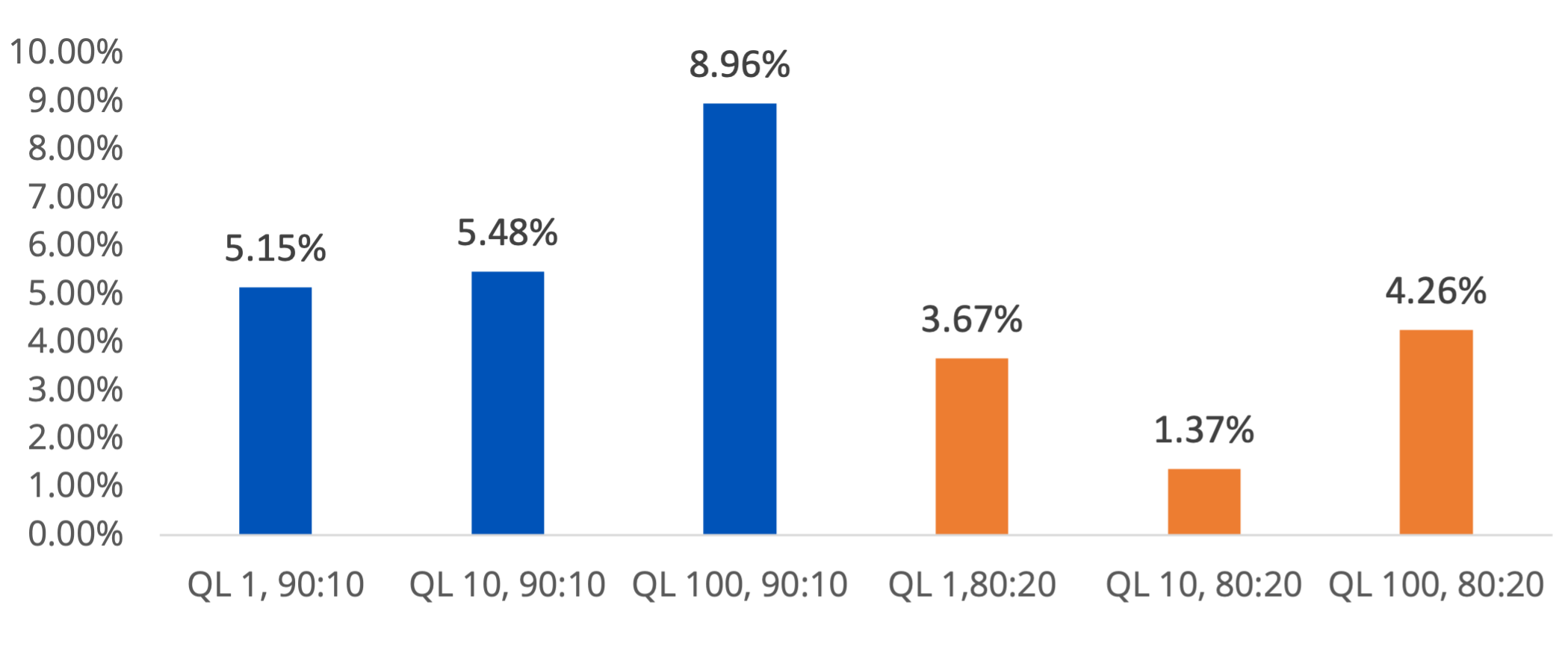

Shown below is latency testing performed by MemVerge. Using 10% CXL and 20% CXL memory across different query limits (QL), Memory Machine X enabled Weaviate to deliver up to 8.96% lower latency.

Weaviate latency with Memory Machine X

(gist-960-Euclidean-128-32 – P95 Latency (ms) – EF512)
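For readers who want to reproduce this kind of comparison, the sketch below shows how queries per second, P95 latency, and a relative improvement could be computed from raw measurements. It is a minimal illustration, not the Weaviate benchmark code: the helper names are hypothetical, P95 uses the nearest-rank method (other tools may interpolate), and the sample numbers are made up for the example rather than taken from the MemVerge tests.

```python
import math


def queries_per_second(num_queries, elapsed_seconds):
    """Throughput: completed queries divided by wall-clock time."""
    return num_queries / elapsed_seconds


def p95(latencies_ms):
    """95th percentile latency using the nearest-rank method."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(0.95 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def percent_change(baseline, measured):
    """Relative change versus baseline, in percent.
    Positive means higher than baseline, negative means lower."""
    return (measured - baseline) / baseline * 100.0


# Made-up sample values, for illustration only.
qps_gain = percent_change(baseline=200.0, measured=210.0)     # more QPS is better
latency_change = percent_change(baseline=20.0, measured=19.0)  # negative is better
sample_p95 = p95(range(1, 101))
```

A QPS result is an improvement when the change is positive, while a latency result is an improvement when the change is negative, so the two charts should be read in opposite directions.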