Emulating CXL Shared Memory Devices in QEMU

by Ryan Willis and Gregory Price

Overview

In this article, we will accomplish the following:

- Building and installing a working branch of QEMU

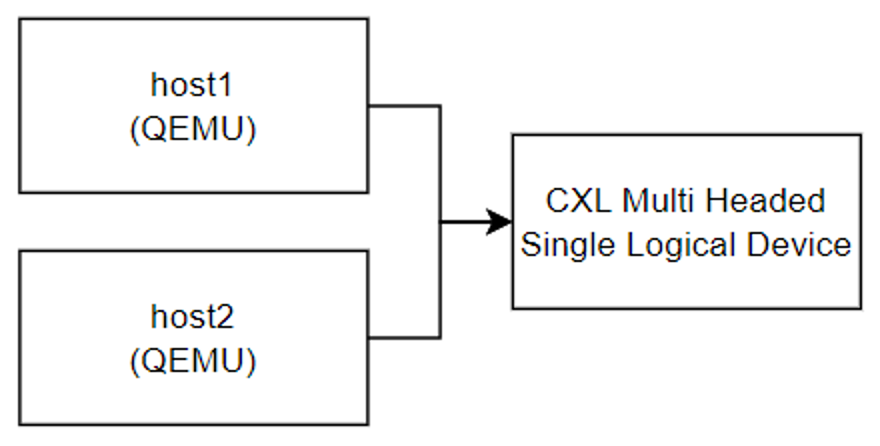

- Launching a pre-made QEMU lab with 2 hosts utilizing a shared memory device

- Accessing the shared memory region through a devdax device, and sharing information between the two hosts to verify that the shared memory region is functioning.

References:

- QEMU branch: cxl-2023-05-25 (repository: https://gitlab.com/jic23/qemu.git)
- MemVerge’s CXL Shared Memory QEMU Image Package: https://app.box.com/shared/static/7iu9dok7me6zk29ed263uhpkd0tn4uqt.tgz
- VM login username/password: fedora/password

Build Prerequisites

This guide is written for a Fedora 38 host system. Package names and versions in the dependency lists below may differ slightly on other distributions.

QEMU-CXL

As of 6/22/2023, mainline QEMU does not fully support creating CXL volatile memory devices, so we need to build a development branch.

These are the dependencies we needed to install on a Fedora host. The exact list may vary with your distribution and version.

sudo dnf group install "C Development Tools and Libraries" "Development Tools"

sudo dnf install golang meson pixman pixman-devel zlib zlib-devel python3 \
    bzip2 bzip2-devel acpica-tools pkgconf-pkg-config libaio libaio-devel \
    liburing liburing-devel libzstd libzstd-devel libgudev ruby rubygem-ncursesw \
    libssh libssh-devel kernel-devel numactl numactl-devel libpmem libpmem-devel \
    libpmem2 libpmem2-devel daxctl daxctl-devel cxl-cli cxl-devel python3-sphinx \
    genisoimage ninja-build libdisk-devel parted-devel util-linux-core bridge-utils \
    libslirp libslirp-devel dbus-daemon dwarves perl

# Optional requirements
sudo dnf install liburing liburing-devel libnfs libnfs-devel libcurl libcurl-devel \
    libgcrypt libgcrypt-devel libpng libpng-devel

# Optional (Fedora 37 or newer)
sudo dnf install blkio blkio-devel

On Ubuntu hosts, install the following dependencies. This list may be incomplete on some systems; any missing dependencies will be reported during QEMU's configure step.

sudo apt-get install libaio-dev liburing-dev libnfs-dev \
    libseccomp-dev libcap-ng-dev libxkbcommon-dev libslirp-dev \
    libpmem-dev python3.10-venv numactl libvirt-dev

Jonathan Cameron is the QEMU maintainer of the CXL subsystem. We will use one of his checkpoint branches, which integrate upcoming work such as volatile memory support.

git clone https://gitlab.com/jic23/qemu.git

cd qemu

git checkout cxl-2023-05-25

mkdir build

cd build

../configure --prefix=/opt/qemu-cxl-shared --target-list=i386-softmmu,x86_64-softmmu --enable-libpmem --enable-slirp

# At this step, you may need to install additional dependencies

make -j all

sudo make install

Our QEMU build is now installed at /opt/qemu-cxl-shared, the path the lab's launch scripts use. To validate:

/opt/qemu-cxl-shared/bin/qemu-system-x86_64 --version

QEMU emulator version 8.0.50 (v6.2.0-12087-g62c0c95799)

Copyright (c) 2003-2022 Fabrice Bellard and the QEMU Project developers

Launching the Pre-Packaged CXL Memory Sharing QEMU Lab

First, download the MemVerge CXL Memory Sharing lab package and extract it:

wget -O memshare.tgz https://app.box.com/shared/static/7iu9dok7me6zk29ed263uhpkd0tn4uqt.tgz

tar -xzf ./memshare.tgz

cd memshare

Inside, you’ll find two QEMU images configured to share a memory region, shell scripts that launch these images, a README, and the base Fedora image the sharing images were built from. Note: as with the memory expander lab, these images have a custom kernel installed.

$ ls

Fedora-Cloud-Base-38-1.6.x86_64.qcow2 README.md share1.sh share2.sh

launch.sh share1.qcow2 share2.qcow2

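Each image is configured with 4 vCPUs, 4 GB of DRAM, and a single 4 GB CXL memory expander. The expanders are backed by a file-backed memory region (memory-backend-file with share=true), which is what makes the memory shared between the instances. For example, the second instance (share2.qcow2, SSH port 2223) is launched with: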

sudo /opt/qemu-cxl-shared/bin/qemu-system-x86_64 \
    -drive file=./share2.qcow2,format=qcow2,index=0,media=disk,id=hd \
    -m 4G,slots=4,maxmem=8G \
    -smp 4 \
    -machine type=q35,cxl=on \
    -daemonize \
    -net nic \
    -net user,hostfwd=tcp::2223-:22 \
    -device pxb-cxl,id=cxl.0,bus=pcie.0,bus_nr=52 \
    -device cxl-rp,id=rp0,bus=cxl.0,chassis=0,port=0,slot=0 \
    -object memory-backend-file,id=mem0,mem-path=/tmp/mem0,size=4G,share=true \
    -device cxl-type3,bus=rp0,volatile-memdev=mem0,id=cxl-mem0 \
    -M cxl-fmw.0.targets.0=cxl.0,cxl-fmw.0.size=4G
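With share=true, QEMU maps the memory-backend-file with MAP_SHARED. When two QEMU processes use the same mem-path, a store made through one mapping is therefore visible through the other. The following is a minimal host-side C sketch of that principle, assuming only POSIX mmap. map_shared_file is a hypothetical helper, not a QEMU function.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper (not part of QEMU): map `size` bytes of the file at
 * `path` read/write with MAP_SHARED, the mapping mode that share=true
 * selects for memory-backend-file. Returns MAP_FAILED on error. */
void *map_shared_file(const char *path, size_t size)
{
    assert(path != NULL && size > 0);

    int fd = open(path, O_RDWR | O_CREAT, 0600);
    if (fd < 0)
        return MAP_FAILED;

    /* Grow (never shrink) the file so it backs the whole mapping;
     * touching pages past end-of-file would raise SIGBUS. */
    struct stat st;
    if (fstat(fd, &st) != 0 ||
        (st.st_size < (off_t)size && ftruncate(fd, (off_t)size) != 0)) {
        close(fd);
        return MAP_FAILED;
    }

    void *p = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd); /* the mapping stays valid after the descriptor is closed */
    return p;
}
```

Calling map_shared_file twice on the same path yields two distinct virtual mappings of the same pages. A string written through one mapping is therefore immediately readable through the other, which is the behavior the two QEMU processes rely on.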

To launch the lab, run launch.sh:

$ ./launch.sh

VNC server running on 127.0.0.1:5904

VNC server running on 127.0.0.1:5905

The instances should now be accessible over SSH on ports 2222 and 2223, respectively.

It can take some time for QEMU to boot. If you cannot SSH in right away, wait a few minutes and try again before troubleshooting.

To access host 1:

- ssh fedora@localhost -p 2222
- password is ‘password’

And for host 2:

- ssh fedora@localhost -p 2223
- password is ‘password’

Setting up DAX Devices on QEMU Instances

In the ‘fedora’ user’s home directory on each instance, you will find two shell scripts: create_region.sh and dax_mode.sh. To create a DAX device for memory sharing, first run create_region.sh:

[fedora@localhost ~]$ ./create_region.sh
{
  "region":"region0",
  "resource":"0x390000000",
  "size":"4.00 GiB (4.29 GB)",
  "type":"ram",
  "interleave_ways":1,
  "interleave_granularity":4096,
  "decode_state":"commit",
  "mappings":[
    {
      "position":0,
      "memdev":"mem0",
      "decoder":"decoder2.0"
    }
  ]
}
cxl region: cmd_create_region: created 1 region
onlined memory for 1 device

If successful, this creates a CXL region (region0) on the emulated device and onlines its memory.

Next, run dax_mode.sh, which offlines that memory and reconfigures the DAX device into devdax mode:

[fedora@localhost ~]$ ./dax_mode.sh
offlined memory for 1 device
[
  {
    "chardev":"dax0.0",
    "size":4294967296,
    "target_node":1,
    "align":2097152,
    "mode":"devdax"
  }
]
reconfigured 1 device

Success! We now have a DAX device, dax0.0, through which we can access the shared memory. Repeat this process on both hosts, and you’re ready to test the shared memory.
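The size and align values reported by dax_mode.sh can also be read programmatically. The following is a minimal sketch that assumes the kernel exposes them as sysfs attributes under /sys/bus/dax/devices/dax0.0/ (check this path on your kernel). read_u64_attr is a hypothetical helper.

```c
#include <assert.h>
#include <errno.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical helper: read one unsigned integer from a sysfs-style
 * attribute file such as /sys/bus/dax/devices/dax0.0/align (path assumed).
 * strtoull with base 0 accepts decimal or 0x-prefixed hex (a bare leading
 * 0 would be parsed as octal). Returns 0 on success, -1 on error. */
int read_u64_attr(const char *path, unsigned long long *out)
{
    assert(path != NULL && out != NULL);

    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;

    char buf[64];
    char *line = fgets(buf, sizeof buf, f);
    fclose(f);
    if (line == NULL)
        return -1;

    char *end;
    errno = 0;
    unsigned long long v = strtoull(buf, &end, 0);
    if (errno != 0 || end == buf)
        return -1;

    *out = v;
    return 0;
}
```

On the guests, reading the assumed size and align attributes should yield 4294967296 and 2097152, matching the dax_mode.sh output. Treat the attribute paths as assumptions to verify.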

Testing Shared Memory Across QEMU Instances

After setting up DAX devices on both hosts, we can run a simple test included in each instance’s home directory.

On both instances, navigate to the daxtest directory and run make to build the simple DAX reader and writer tests:

[fedora@localhost daxtest]$ make

gcc -o daxreader daxreader.c

These programs mmap the /dev/dax0.0 device to directly access the shared memory region.
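The daxtest sources are not reproduced here. However, any program that maps a device-DAX node must respect two constraints: the mapping must be MAP_SHARED, and its length must be a multiple of the device alignment (2 MiB, per "align":2097152 in the dax_mode.sh output). The following hypothetical reader/writer pair is written under those assumptions. It is not the daxreader.c/daxwriter.c shipped in the images.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/* Device alignment taken from the dax_mode.sh output ("align":2097152). */
#define DAX_ALIGN ((size_t)2 * 1024 * 1024)

/* Round len up to a multiple of align (align must be a power of two). */
size_t dax_round_up(size_t len, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (len + align - 1) & ~(align - 1);
}

/* Hypothetical writer: copy a NUL-terminated message to offset 0 of the
 * device at `path`. Returns 0 on success, -1 on error. */
int dax_write_msg(const char *path, const char *msg)
{
    size_t n = strlen(msg) + 1;                 /* include the terminator */
    size_t len = dax_round_up(n, DAX_ALIGN);    /* devdax needs aligned length */
    int fd = open(path, O_RDWR);
    if (fd < 0)
        return -1;
    char *p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd);
    if (p == MAP_FAILED)
        return -1;
    memcpy(p, msg, n);
    munmap(p, len);
    return 0;
}

/* Hypothetical reader: copy up to cap-1 bytes of the message at offset 0
 * into out, always NUL-terminating. Returns 0 on success, -1 on error. */
int dax_read_msg(const char *path, char *out, size_t cap)
{
    assert(out != NULL && cap > 0);
    size_t len = dax_round_up(cap, DAX_ALIGN);
    int fd = open(path, O_RDONLY);
    if (fd < 0)
        return -1;
    const char *p = mmap(NULL, len, PROT_READ, MAP_SHARED, fd, 0);
    close(fd);
    if (p == MAP_FAILED)
        return -1;
    size_t i = 0;
    while (i + 1 < cap && p[i] != '\0') {
        out[i] = p[i];
        i++;
    }
    out[i] = '\0';
    munmap((void *)p, len);
    return 0;
}
```

On the guests, the path would be "/dev/dax0.0", opened as root. For experimentation, the same functions also work on an ordinary file that is at least 2 MiB long. A real program would usually keep one mapping open instead of remapping on every call.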

Now, let’s send a message from instance 1 to instance 2 using daxreader and daxwriter.

Note: daxreader and daxwriter must be run with sudo to access /dev/dax0.0.

On instance 1, run daxwriter to send the message:

[fedora@localhost daxtest]$ sudo ./daxwriter "Hello from instance 1!"

Paragraph written to DAX device successfully.

On instance 2, run daxreader to read the message:

[fedora@localhost daxtest]$ sudo ./daxreader

Paragraph read from DAX device:

Hello from instance 1!

The second instance read back the message that the first instance wrote into the shared memory of the emulated CXL device.
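A raw string at offset 0 gives the reader no way to tell a fresh message from leftover bytes. One common approach is to prefix the payload with a magic value and a length and have the reader validate both. The following sketch shows a hypothetical layout of this kind; it is not the format used by the lab's daxtest programs. It works on any byte buffer, such as a pointer returned by mmap on /dev/dax0.0. It only validates the layout and does not by itself synchronize a concurrent writer and reader.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical message layout: a fixed header at offset 0 of the shared
 * region, followed by the payload bytes. */
#define MSG_MAGIC 0x4D534731u /* arbitrary marker value */

struct msg_hdr {
    uint32_t magic; /* MSG_MAGIC when a message is present */
    uint32_t len;   /* payload length in bytes, excluding the header */
};

/* Store payload into a region of `cap` bytes. Returns 0, or -1 if the
 * header plus payload does not fit. */
int msg_put(unsigned char *region, size_t cap, const void *payload, uint32_t len)
{
    assert(region != NULL);
    struct msg_hdr h = { MSG_MAGIC, len };
    if (sizeof h + (size_t)len > cap)
        return -1;
    /* Header written last so a half-written message is less likely to look
     * valid; this is not a synchronization guarantee. */
    memcpy(region + sizeof h, payload, len);
    memcpy(region, &h, sizeof h);
    return 0;
}

/* Copy a validated payload into out (out_cap bytes). Returns the payload
 * length, or -1 if no valid message is present or it does not fit. */
long msg_get(const unsigned char *region, size_t cap, void *out, size_t out_cap)
{
    assert(region != NULL);
    struct msg_hdr h;
    if (cap < sizeof h)
        return -1;
    memcpy(&h, region, sizeof h);
    if (h.magic != MSG_MAGIC || sizeof h + (size_t)h.len > cap || h.len > out_cap)
        return -1;
    memcpy(out, region + sizeof h, h.len);
    return (long)h.len;
}
```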

A word on Cache Coherency

These images run on the same physical host and map the same file-backed memory region as their source of shared memory, so every access goes through the host CPU's coherent cache hierarchy. As a result, this memory is cache coherent, which means this emulated environment represents a CXL 3.0 Type-3 device with “Hardware-Controlled Cache Coherency”.

A similar setup can be achieved with QEMU's inter-VM shared memory device (ivshmem):

-object memory-backend-file,size=1G,mem-path=/dev/hugepages/my-shmem-file,share=on,id=mb1 \
-device ivshmem-plain,memdev=mb1

Users should not assume that hardware-coherent shared memory like this will be available on CXL 2.0 systems. Devices presenting distributed shared memory in the CXL 2.0 timeframe will not provide hardware-controlled cache coherency; they will instead require software-controlled cache coherency.
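Software-controlled coherency generally means that the writer explicitly writes back its cache lines after updating shared memory, and the reader discards its cached copies before reading. The following is a minimal x86-64 sketch of that idea using the SSE2 intrinsics _mm_clflush and _mm_mfence. It assumes 64-byte cache lines and illustrates the technique only; it is not GISMO's implementation or a complete coherency protocol.

```c
#include <assert.h>
#include <emmintrin.h> /* SSE2: _mm_clflush, _mm_mfence (x86-64 only) */
#include <stddef.h>
#include <stdint.h>

#define CACHE_LINE 64 /* assumed cache-line size in bytes */

/* Write back and invalidate every cache line overlapping [p, p + len).
 * A writer would call this after storing to shared memory, and a reader
 * before loading from it, so neither side relies on stale cached data. */
void flush_range(const void *p, size_t len)
{
    assert(p != NULL || len == 0);
    if (len == 0)
        return;

    uintptr_t start = (uintptr_t)p & ~(uintptr_t)(CACHE_LINE - 1);
    uintptr_t end = (uintptr_t)p + len;

    _mm_mfence(); /* conservatively order earlier loads/stores before the flushes */
    for (uintptr_t a = start; a < end; a += CACHE_LINE)
        _mm_clflush((const void *)a);
    _mm_mfence(); /* keep later loads/stores from running ahead of the flushes */
}
```

In this lab the flushes are unnecessary because the emulated memory is coherent. On hardware that only offers software-managed coherency, calls like these would bracket each write and each read of shared data.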

MemVerge has launched the GISMO (Global IO-Free Shared Memory Objects) initiative to provide software-controlled cache coherency for a subset of use cases. If you are interested in exploring these use cases with us, please contact us.

A word on Security

This shared memory /dev/dax device provides raw access to memory. Both connected hosts are free to use this memory, and no mechanism lets one host restrict the other’s access. As a result, this shared memory region should be exposed to programs only through tightly controlled interfaces.
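One small building block of such an interface is refusing to use the device node if it is accessible to more than its intended owner. The following sketch shows a hypothetical policy check of that kind. The function name and the policy itself are illustrative assumptions, not something the lab enforces.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical policy check: return 1 if `path` is owned by `owner` and
 * grants no permissions to group or others, 0 if it is more widely
 * accessible, and -1 if it cannot be stat'ed. */
int is_private_node(const char *path, uid_t owner)
{
    assert(path != NULL);
    struct stat st;
    if (stat(path, &st) != 0)
        return -1;
    return st.st_uid == owner && (st.st_mode & (S_IRWXG | S_IRWXO)) == 0;
}
```

A program could call is_private_node("/dev/dax0.0", 0) before opening the device and refuse to continue if it returns 0. This is only one thin layer: any root process on either host can still reach the memory.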

Future Work

Stay tuned for a follow-up on implementing software-controlled memory pooling on top of a simple multi-headed memory expander device!